For the pdf slides, click here

K-means Clustering vs Mixtures of Gaussians

K-means clustering

K-means clustering: problem

Data

- DD-dimensional observations: x1,…,xNx1,…,xN

Parameters

- KK clusters’ means: μ1,…,μK

- Binary indicator rnk∈{0,1}: if object n is in class k

Goal: find values for {μk} and {rnk} to minimize the objective function (called a distortion measure) J=N∑n=1K∑k=1rnk‖xn−μk‖2

K-means clustering: solution

Two-stage optimization

- Update rnk and μk alternatively, and repeat until convergence

- Resembles the E step and M step in the EM algorithm

E(expectation) step: updates rnk.

- Assign the nth data point to the closest cluster center rnk={1 if k=argminj‖xn−μk‖20 otherwise

M(maximization) step: updates μk

- Set cluster mean to be mean of all data points assigned to this cluster μk=∑nrnkxn∑nrnk

Mixture of Gaussians

Mixture of Gaussians: definition

Mixture of Gaussians: log likelihood logp(x)=log{K∑k=1πk⋅N(x∣μk,Σk)}

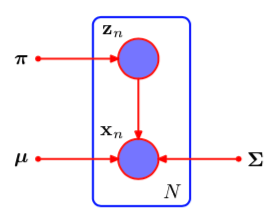

Introduce a K-dim latent indicator variable z∈{0,1}K zk=1(if x is from the k-th Gaussian component)

The marginal distribution of z is multinomial p(zk=1)=πk

- We call the posterior probability as the Responsibility that component k takes for explaining the observation x γ(zk)=p(zk=1∣x)=πk⋅N(x∣μk,Σk)∑Kj=1πj⋅N(x∣μj,Σj)

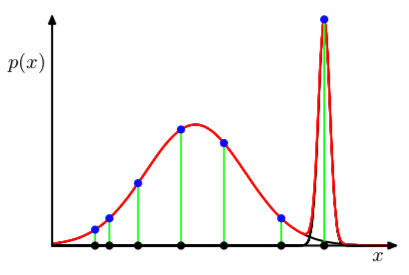

Mixture of Gaussians: singularity problem with MLE

Problem with maximum likelihood estimation: presence of singularities: there will be clusters that contains only one data point, so that the corresponding covariance matrix will be estimated at zero, thus the likelihood explodes

Therefore, when finding MLE, we should avoid finding such singularity solution and instead seek well-behaved local maxima of the likelihood function: see the following EM approach

Alternatively, we can to adopt a Bayesian approach

Figure 1: Illustration of singularities

Conditional MLE of μk

- Suppose we observe N data points X={x1,…,xN}

Similarly, we write the N latent variables as Z={z1,…,zN}

Set the derivatives of logp(X∣π,μ,Σ) with respect to μ to zero 0=N∑n=1γ(znk) Σk (xn−μk) Then we obtain μk=1NkN∑n=1γ(znk) xn where Nk is the effective number of points assigned to cluster k Nk=N∑n=1γ(znk)

Conditional MLE of Σk and πk

Similarly, setting the derivatives of log likelihood wrt Σk, we have Σk=1NkN∑n=1γ(znk) (xn−μk)(xn−μk)⊤

Use Lagrange multiplier to maximize log likelihood wrt πk under the constraint that all πk add up to one: logp(X∣π,μ,Σ)+λ(K∑k=1πk−1) we get the solution πk=NkN

The above results on μk,Σk,πk are not closed-form solution because the responsibilities γ(znk) depend on them in a complex way.

EM algorithm for mixture of Gaussians

Initialize μk,Σk,πk, usually using the K-means algorithm.

E step: compute responsibilities using the current parameters γ(znk)=πk⋅N(xn∣μk,Σk)∑Kj=1πj⋅N(xn∣μj,Σj)

M step: re-estimate the parameters using the current responsibilities, where Nk=∑Nn=1γ(znk) μnewk=1NkN∑n=1γ(znk) xnΣnewk=1NkN∑n=1γ(znk) (xn−μk)(xn−μk)⊤πnewk=NkN

Check for convergence of either the parameters or the log likelihood. If not converged, return to step 2.

Connection between K-means and Gaussian mixture model

K-means algorithm itself is often used to initialize the parameters in a Gaussian mixture model before applying the EM algorithm

Mixture of Gaussians: soft assignment of data points to clusters, using posterior probabilities

K-means can be viewed as a special case of mixture of Gaussian, where covariances of mixture components are ϵI, where ϵ is a parameter shared by all components.

- In the responsibility calculation, γ(znk)=πkexp{−‖xn−μk‖2/2ϵ}∑jπjexp{−‖xn−μj‖2/2ϵ} In the limit ϵ→0, for each observation n, the responsibilities {γ(znk),k=1,…,K} has exactly one unity and all the rest are zero.

EM Algorithm

The general EM algorithm

EM algorithm: definition

Goal: maximize likelihood p(X∣θ) with respect to the parameter θ, for models having latent variables Z.

- Notations

- X: observed data; also called incomplete data

- θ: model parameters

- Z: latent variables, usually each observation has a latent variable

- {X,Z} is called complete data

- Log likelihood

log p(X∣θ)=log{∑Zp(X,Z∣θ)}

- The sum over Z can be replaced by an integral if Z is continuous

- The presence of sum prevents the logarithm from acting directly on the joint distribution. This complicates MLE solutions, especially for exponential family.

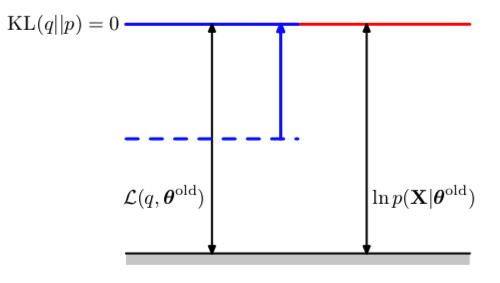

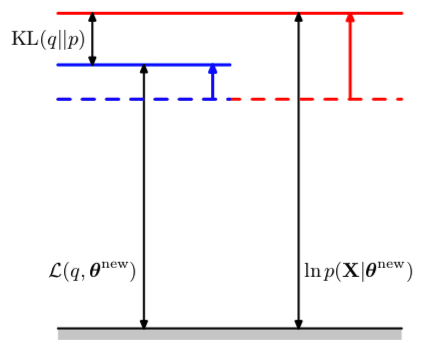

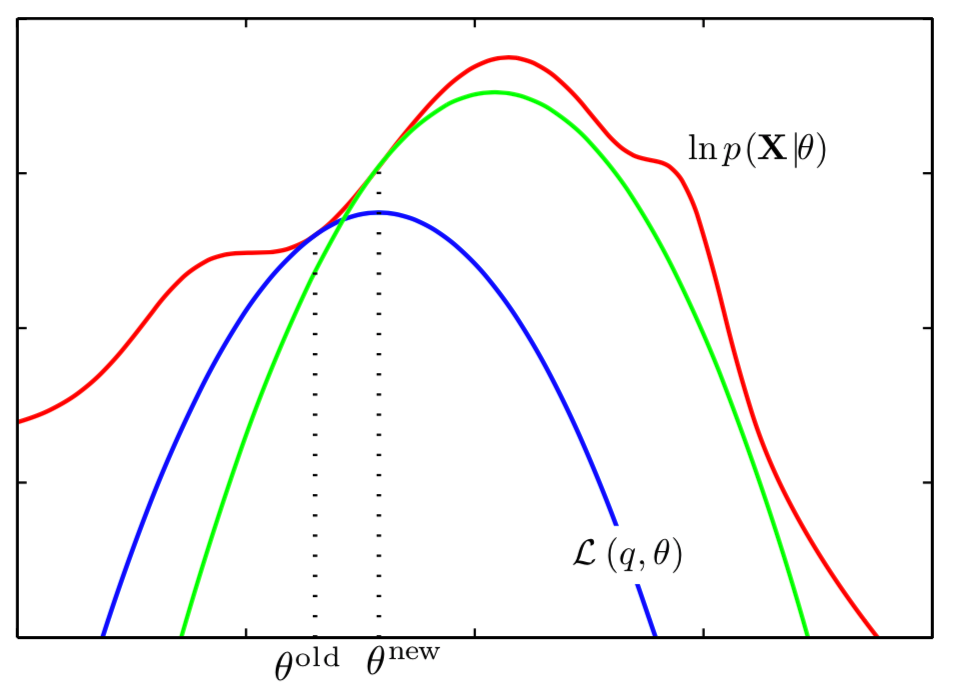

General EM algorithm: two-stage iterative optimization

Choose the initial parameters θold

E step: since the conditional posterior p(Z∣X,θold) contains all of our knowledge about the latent variable Z, we compute the expected complete-data log likelihood under it. Q(θ,θold)=EZ∣X,θold{logp(X,Z∣θ)}=∑Zp(Z∣X,θold)logp(X,Z∣θ)

- M step: revise parameter estimate

θnew=argmaxθQ(θ,θold)

- Note in the maximizing step, the logarithm acts driectly on the joint likelihood p(X,Z∣θ), so the maximizating will be tractable.

Check for convergence of the log likelihood or the parameter values. If not converged, use θnew to replace θold, and return to step 2.

Gaussian mixtures revisited

- Recall that latent variables Z∈RN×K : znk=1(if xn is from the k-th Gaussian component)

- Complete data log likelihood

logp(X,Z∣μ,Σ,π)=N∑n=1K∑k=1znk{logπk+logN(xn∣μk,Σk)}

- Comparing this with incomplete data log likelihood in Eq , we have the sum over k and logarithm interchanged. Thus, the logarithm acts on Gaussian density directly.

Figure 2: Mixture of Gaussians, treating latent variables as observed

Continue: Gaussian mixtures revisited

Conditional posterior of Z p(Z∣X,μ,Σ,π)∝N∏n=1K∏k=1[πkN(xn∣μk,Σk)]znk Thus, the conditional posterior of {zn} are independent

Conditional expectations EZ∣X,μold,Σold,πold znk=γ(znk)old

Thus the objective function in the M-step EZ∣X,μold,Σold,πold logp(X,Z∣μ,Σ,π)=N∑n=1K∑k=1γ(znk)old{logπk+logN(xn∣μk,Σk)}