
Notations in this chapter

- $Y$: a matrix that contains missing data

- $Y_j$: the $j$th column in $Y$

- $Y_{-j}$: all but the $j$th column of $Y$

- $R$: a missing indicator matrix

- $R_{ij} = 0$ if $Y_{ij}$ is missing and $R_{ij} = 1$ if $Y_{ij}$ is observed

Missing Data Pattern

Missing data pattern summary statistics

- When the number of columns is small, we can use the `md.pattern` function in `mice` to get missing data counts of all combinations among columns

```r
library(mice)

md.pattern(pattern4, plot = FALSE)
##   A B C
## 2 1 1 1 0
## 3 1 1 0 1
## 1 1 0 1 1
## 2 0 0 1 2
##   2 3 3 8
```

- When the number of columns is large, we can use the `md.pairs` function in `mice` to check the counts of each of the four pairwise missingness patterns (`rr`, `rm`, `mr`, and `mm`)
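To make the four pairwise counts concrete, here is a minimal base R sketch that computes them by hand. The data frame `Y` is made up for illustration (it is not the `pattern4` dataset, only built to have the same missingness pattern), and `pair_counts` is a hypothetical name. In each matrix, the row variable is the first letter and the column variable is the second (`r` = observed, `m` = missing).

```r
# Hypothetical 8-row data set with the same missingness pattern as pattern4
Y <- data.frame(
  A = c(1, 2, 3, 4, 5, 6, NA, NA),
  B = c(1, 2, 3, 4, 5, NA, NA, NA),
  C = c(1, 2, NA, NA, NA, 6, 7, 8)
)

r <- 1 * (!is.na(Y))   # 1 = observed, 0 = missing
m <- 1 - r             # 1 = missing,  0 = observed

pair_counts <- list(
  rr = t(r) %*% r,   # row variable observed, column variable observed
  rm = t(r) %*% m,   # row variable observed, column variable missing
  mr = t(m) %*% r,   # row variable missing,  column variable observed
  mm = t(m) %*% m    # both variables missing
)

pair_counts$mr
```

For every pair of variables the four counts add up to the number of rows, which is a quick sanity check on the result.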

Inbound and outbound statistics: measure pairwise missing patterns

- Proportion of usable cases, i.e., the inbound statistic for imputing variable $Y_j$ from variable $Y_k$:

(number of cases where $Y_j$ is missing but $Y_k$ is observed) / (number of missing values in $Y_j$)

- When imputing $Y_j$, we can use this statistic to quickly identify which variables to use as predictors

- The outbound statistic measures how the observed data in $Y_j$ connect to the missing data in $Y_k$
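Both statistics can be computed directly from the pairwise counts. The sketch below uses base R on a made-up data frame `Y` (hypothetical data; the names `inbound`, `outbound`, and `rm_` are chosen for this example only). With `mice`, the same matrices would typically be derived from the output of `md.pairs`, for example `p$mr / (p$mr + p$mm)` for the inbound statistic.

```r
# Hypothetical data: A, B, C with a general missingness pattern
Y <- data.frame(
  A = c(1, 2, 3, 4, 5, 6, NA, NA),
  B = c(1, 2, 3, 4, 5, NA, NA, NA),
  C = c(1, 2, NA, NA, NA, 6, 7, 8)
)

r <- 1 * (!is.na(Y))   # 1 = observed
m <- 1 - r             # 1 = missing

rr  <- t(r) %*% r      # row observed, column observed
rm_ <- t(r) %*% m      # row observed, column missing (named rm_ to avoid masking rm())
mr  <- t(m) %*% r      # row missing,  column observed
mm  <- t(m) %*% m      # both missing

# Inbound: among cases where the row variable is missing,
# the share in which the column variable is observed
inbound <- mr / (mr + mm)

# Outbound: among cases where the row variable is observed,
# the share in which the column variable is missing
outbound <- rm_ / (rm_ + rr)

round(inbound, 3)
round(outbound, 3)
```

In this example the inbound statistic for imputing `B` from `A` is 1/3, while for imputing `B` from `C` it is 1, so `C` is the more useful predictor for `B`.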

Different imputation strategies for different missing patterns

Monotone data imputation

- for monotone missing data pattern

- imputations are created by a sequence of univariate methods

Joint modeling (JM)

- for general missing patterns

- imputations are created by multivariate models

Fully conditional specification (FCS, aka chained equations)

- for general missing patterns

- imputations are drawn from iterated conditional univariate models

- In practice, FCS is usually found to perform better than JM

Block of variables, hybrid imputation between JM and FCS

Monotone data imputation

A monotone missing pattern: the columns of $Y$ can be ordered such that for any row, if $Y_j$ is missing, then all columns to the right of $Y_j$ are also missing

Suppose the variables with missing values are ordered as $Y_1, \ldots, Y_p$, and the variables without missing values are denoted as $X$. Then monotone imputation proceeds as follows:

- Impute $Y_1$ from $X$

- Impute $Y_2$ from $(Y_1, X)$

- …

- Impute $Y_p$ from $(Y_1, \ldots, Y_{p-1}, X)$
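The sequence above can be sketched in base R as follows. This is an illustration under simplifying assumptions, not the method of any particular package: the data are simulated, `impute_one` is a hypothetical helper, and each univariate step is a stochastic linear regression that ignores parameter uncertainty (a proper multiple imputation method would also draw the regression parameters).

```r
set.seed(1)
n  <- 200
X  <- rnorm(n)
Y1 <- X + rnorm(n)
Y2 <- X + Y1 + rnorm(n)

# Create a monotone pattern: whenever Y1 is missing, Y2 is missing too
Y1[151:200] <- NA
Y2[101:200] <- NA
dat <- data.frame(X, Y1, Y2)
observed <- !is.na(dat)

# Hypothetical helper: impute `target` from `predictors` by regression plus noise
impute_one <- function(dat, target, predictors) {
  miss <- is.na(dat[[target]])
  fit  <- lm(reformulate(predictors, response = target), data = dat[!miss, ])
  pred <- predict(fit, newdata = dat[miss, ])
  dat[[target]][miss] <- pred + rnorm(sum(miss), sd = summary(fit)$sigma)
  dat
}

# One pass through the variables, ordered from least to most missing
completed <- impute_one(dat, "Y1", "X")
completed <- impute_one(completed, "Y2", c("X", "Y1"))

colSums(is.na(completed))
```

Because of the monotone ordering, every predictor is already complete when it is used, so a single pass suffices and no iteration is needed.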

Fully Conditional Specification (FCS)

Fully conditional specification (FCS): similar to Gibbs sampling

FCS specifies the multivariate distribution of $Y$ through a set of conditional densities $P(Y_j \mid Y_{-j})$, one for each incomplete variable $Y_j$

- The conditional density $P(Y_j \mid Y_{-j})$ is used to impute $Y_j$ given $Y_{-j}$ (including the most recently imputed values).

- We can use the univariate imputation method introduced in Chapter 3 as building blocks

- To initialize, we can impute from the marginal distributions

- One iteration consists of one cycle through all $Y_j$. The total number of iterations can often be low, e.g., 5, 10, or 20.

For multiple imputation, perform this process in parallel $m$ times
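A minimal base R sketch of this loop is given below, to show the structure of the algorithm rather than a production implementation. The simulated data and the function `fcs_once` are hypothetical, and the univariate step is a simple stochastic linear regression that ignores parameter uncertainty. In practice one would call `mice()` with arguments such as `m` and `maxit` instead.

```r
set.seed(2)
n  <- 300
X  <- rnorm(n)
Y1 <- X + rnorm(n)
Y2 <- 0.5 * X + 0.5 * Y1 + rnorm(n)
dat <- data.frame(X, Y1, Y2)
dat$Y1[sample(n, 60)] <- NA   # general (non-monotone) missingness
dat$Y2[sample(n, 60)] <- NA

# Hypothetical helper: one FCS chain with n_iter iterations
fcs_once <- function(dat, n_iter = 10) {
  miss    <- is.na(dat)                       # fixed missingness indicator
  targets <- colnames(miss)[colSums(miss) > 0]

  # Initialise: draw starting values from the observed marginal distribution
  for (v in targets) {
    obs_vals <- dat[[v]][!miss[, v]]
    dat[[v]][miss[, v]] <- sample(obs_vals, sum(miss[, v]), replace = TRUE)
  }

  # One iteration = one cycle through all incomplete variables
  for (it in seq_len(n_iter)) {
    for (v in targets) {
      others <- setdiff(names(dat), v)
      # Fit on rows where v is observed; predictors use the latest imputations
      fit  <- lm(reformulate(others, response = v), data = dat[!miss[, v], ])
      pred <- predict(fit, newdata = dat[miss[, v], ])
      dat[[v]][miss[, v]] <- pred + rnorm(sum(miss[, v]), sd = summary(fit)$sigma)
    }
  }
  dat
}

# Multiple imputation: run m independent chains
m <- 5
imputed <- lapply(seq_len(m), function(i) fcs_once(dat, n_iter = 10))
```

Each element of `imputed` is one completed data set. The chains are independent, so they could equally be run in parallel.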

Convergence of FCS (and in general of a Markov chain)

Irreducible: the chain must be able to reach all interesting parts of the state space

- Easy; users have large control over the interesting parts.

Aperiodic: the chain should not oscillate between different states

- One way to diagnose this is to stop the chain at different points and check that the stopping point does not affect the statistical inferences

Recurrence: all interesting parts can be reached infinitely often, at least from almost all starting points

- May be diagnosed from traceplots
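As an illustration, traceplots can be produced with `mice` by plotting the object returned by `mice()`. The sketch below uses the `nhanes` example data shipped with the package. The seed and the number of iterations are arbitrary choices made for this example.

```r
library(mice)

# nhanes is a small example data set shipped with mice
imp <- mice(nhanes, m = 5, maxit = 20, seed = 123, printFlag = FALSE)

# Traceplots: mean and standard deviation of the imputed values per variable,
# plotted against the iteration number, with one line per imputation chain
plot(imp)

# The plotted chain means are stored as a variables x iterations x chains array
dim(imp$chainMean)
```

In a healthy run the lines from the different chains intermingle freely and show no clear trend across iterations.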

Compatibility

- Two conditional densities $p(Y_1 \mid Y_2)$ and $p(Y_2 \mid Y_1)$ are compatible if

- a joint distribution $p(Y_1, Y_2)$ exists, and

- it has $p(Y_1 \mid Y_2)$ and $p(Y_2 \mid Y_1)$ as its conditional densities
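As a standard textbook illustration (not taken from the source), consider a bivariate normal distribution for $(Y_1, Y_2)$ with means $\mu_1, \mu_2$, variances $\sigma_1^2, \sigma_2^2$, and correlation $\rho$. Its two conditionals are normal linear regressions:

$$
\begin{aligned}
Y_1 \mid Y_2 &\sim N\!\left(\mu_1 + \rho\,\frac{\sigma_1}{\sigma_2}\,(Y_2 - \mu_2),\; \sigma_1^2\,(1 - \rho^2)\right), \\
Y_2 \mid Y_1 &\sim N\!\left(\mu_2 + \rho\,\frac{\sigma_2}{\sigma_1}\,(Y_1 - \mu_1),\; \sigma_2^2\,(1 - \rho^2)\right).
\end{aligned}
$$

Since both are derived from the same joint distribution, this pair of conditional densities is compatible by construction.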

FCS is only guaranteed to work if the conditionals are compatible

The MICE algorithm (the FCS implemented in the `mice` package) is ignorant of the non-existence of a joint distribution, and imputes anyway.

- Empirical evidence suggests the estimation results may be robust against violations of compatibility

Number of FCS iterations

Why can the number of iterations in FCS be low (usually 5-20)?

- The imputed data can have a considerable amount of random noise

- Hence, if the relations between the variables are not strong, the autocorrelation across iterations may be low, and thus convergence can be rapid

Watch out for the following situations:

- the correlations between the $Y_j$'s are high

- missing rates are high

- constraints on parameters across different variables exist

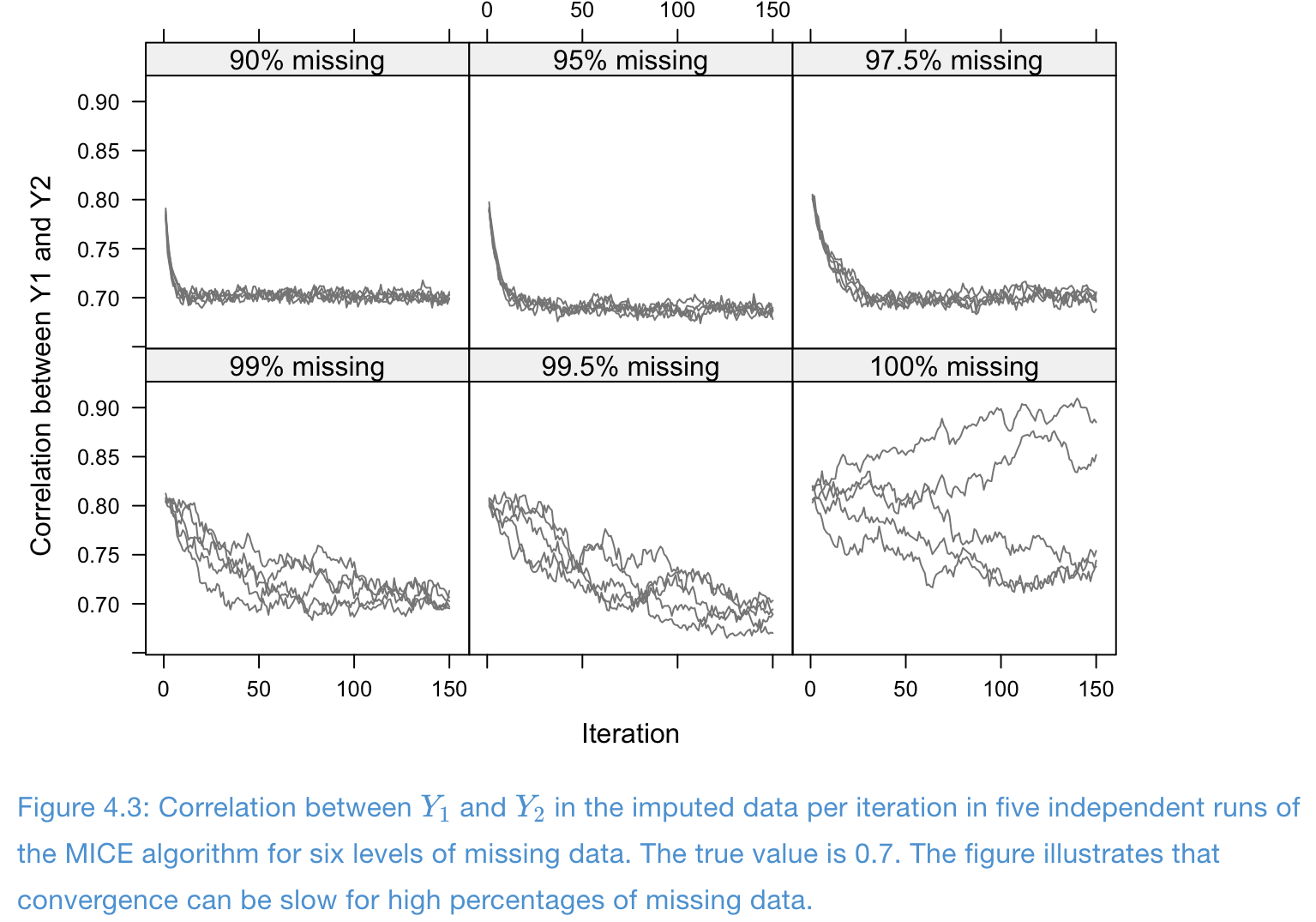

Example of slow convergence: design of simulation

- One complete covariate $X$ and two incomplete variables $Y_1$ and $Y_2$

- Data are drawn from a multivariate normal distribution with specified pairwise correlations

- Total sample size $n$, of which only a subset are completely observed cases

- Imputation models are normal linear regressions (PMM)
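A data set of this kind can be generated with a few lines of base R. The sketch below is only an illustration of the design: the sample size, the number of complete cases, and the correlation values are assumptions chosen for this example, not the values used in the original simulation.

```r
set.seed(3)

# Assumed settings (hypothetical values, for illustration only)
n          <- 1000   # total sample size
n_complete <- 100    # number of completely observed cases
rho_xy     <- 0.9    # correlation between X and each of Y1, Y2
rho_yy     <- 0.7    # correlation between Y1 and Y2

Sigma <- matrix(c(1,      rho_xy, rho_xy,
                  rho_xy, 1,      rho_yy,
                  rho_xy, rho_yy, 1), nrow = 3)

# Draw from N(0, Sigma) using the Cholesky factor of Sigma
Z   <- matrix(rnorm(n * 3), nrow = n) %*% chol(Sigma)
dat <- data.frame(X = Z[, 1], Y1 = Z[, 2], Y2 = Z[, 3])

# Outside the complete cases, every row misses exactly one of Y1 and Y2,
# so Y1 and Y2 are jointly observed only on the first n_complete rows
incomplete <- (n_complete + 1):n
miss_y1    <- incomplete[seq_along(incomplete) %% 2 == 0]
miss_y2    <- setdiff(incomplete, miss_y1)
dat$Y1[miss_y1] <- NA
dat$Y2[miss_y2] <- NA

colMeans(is.na(dat))   # missing rate per variable
```

With few jointly observed cases and strongly correlated variables, each imputation step depends heavily on the previous imputations of the other variable, which is the situation in which FCS tends to converge slowly.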

Example of slow convergence: traceplots

- Missing problem with high correlation and high missing rates: convergence is poor

References

Van Buuren, S. (2018). Flexible Imputation of Missing Data, 2nd Edition. CRC press.