
Complete-case (CC) analysis

Complete-case (CC) analysis: use only data points (units) where all variables are observed

Loss of information in CC analysis:

- Loss of precision (larger variance)

- Bias, when the missingness mechanism is not MCAR. In this case, the complete units are not a random sample of the population

In these notes, I will focus on the bias issue:

- Adjusting for the CC analysis bias using weights

- This idea is closely related to weighting in randomization inference for finite population surveys

Weighted Complete-Case Analysis

Notations

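- Population size $N$, sample size $n$

- Number of variables (items): $K$

- Data: $Y = (y_{ij})$, where $i = 1, \dots, N$ and $j = 1, \dots, K$

- Design information (about sampling or missingness): $Z$

Sample indicator: $I = (I_1, \dots, I_N)$; for unit $i$, $I_i = 1$ if unit $i$ is sampled and $I_i = 0$ otherwise

Sample selection processes can be characterized by a distribution for $I$ given $Y$ and $Z$.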

Probability sampling

Properties of probability sampling

Unconfounded: selection doesn’t depend on $Y$, i.e., $f(I \mid Y, Z) = f(I \mid Z)$

Every unit has a positive (known) probability of selection: $\pi_i = P(I_i = 1 \mid Z) > 0$ for all $i$

In an equal probability sample design, $\pi_i$ is the same for all $i$

Stratified random sampling

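$Z$ is a variable defining strata. Suppose Stratum $j$ has $N_j$ units in total, for $j = 1, \dots, J$

In Stratum $j$, stratified random sampling takes a simple random sample of $n_j$ units

The distribution of $I$ under stratified random sampling is

$$
f(I \mid Z) = \begin{cases} \prod_{j=1}^{J} \binom{N_j}{n_j}^{-1}, & \text{if } \sum_{i:\, z_i = j} I_i = n_j \text{ for } j = 1, \dots, J, \\ 0, & \text{otherwise.} \end{cases}
$$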
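This distribution can be checked on a tiny population. The sketch below (standard library only; the population, stratum labels, and helper names are hypothetical) draws one stratified random sample and enumerates all feasible samples, each of which has probability $\prod_j \binom{N_j}{n_j}^{-1}$.

```python
import itertools
import math
import random


def stratified_random_sample(strata_units, n_per_stratum, rng):
    """Draw a simple random sample of n_j units within each stratum j."""
    return {j: rng.sample(units, n_per_stratum[j]) for j, units in strata_units.items()}


def sample_probability(N_per_stratum, n_per_stratum):
    """f(I | Z) for any feasible sample: prod_j 1 / C(N_j, n_j)."""
    p = 1.0
    for j, N_j in N_per_stratum.items():
        p /= math.comb(N_j, n_per_stratum[j])
    return p


# Hypothetical population: stratum 1 holds units 0-3 (N_1 = 4), stratum 2 holds units 4-6 (N_2 = 3)
strata_units = {1: [0, 1, 2, 3], 2: [4, 5, 6]}
n_per_stratum = {1: 2, 2: 1}
N_per_stratum = {j: len(units) for j, units in strata_units.items()}

draw = stratified_random_sample(strata_units, n_per_stratum, random.Random(0))

# Every feasible sample takes exactly n_j units from stratum j
feasible = list(itertools.product(
    *(itertools.combinations(strata_units[j], n_per_stratum[j]) for j in strata_units)
))
print(draw, len(feasible), sample_probability(N_per_stratum, n_per_stratum))
```

There are $\binom{4}{2}\binom{3}{1} = 18$ feasible samples, each with probability $1/18$, so the probabilities sum to one.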

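Example: estimating population mean $\bar{Y}$

An unbiased estimate is the stratified sample mean

$$\bar{y}_{st} = \sum_{j=1}^{J} \frac{N_j}{N} \bar{y}_j,$$

where $\bar{y}_j$ is the sample mean in stratum $j$

Sampling variance approximation

$$v(\bar{y}_{st}) = \sum_{j=1}^{J} \left(\frac{N_j}{N}\right)^2 \left(\frac{1}{n_j} - \frac{1}{N_j}\right) s_j^2,$$

where $s_j^2$ is the sample variance of $Y$ in stratum $j$

A large sample 95% confidence interval for $\bar{Y}$ is $\bar{y}_{st} \pm 1.96 \sqrt{v(\bar{y}_{st})}$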
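A minimal sketch of these three formulas, assuming the sampled values are grouped by stratum in a dictionary and the stratum sizes $N_j$ are known; the data and the function name are made up for illustration.

```python
import math
from statistics import mean, variance


def stratified_estimate(samples, pop_sizes):
    """Stratified sample mean, its approximate sampling variance, and a 95% CI.

    samples:   dict stratum -> list of sampled y values (at least 2 per stratum)
    pop_sizes: dict stratum -> population stratum size N_j
    """
    N = sum(pop_sizes.values())
    y_st = 0.0
    v = 0.0
    for j, ys in samples.items():
        n_j, N_j = len(ys), pop_sizes[j]
        share = N_j / N                                      # N_j / N
        y_st += share * mean(ys)                             # sum_j (N_j/N) * ybar_j
        v += share ** 2 * (1 / n_j - 1 / N_j) * variance(ys)  # s_j^2 = sample variance
    half_width = 1.96 * math.sqrt(v)
    return y_st, v, (y_st - half_width, y_st + half_width)


# Hypothetical stratified sample
samples = {"A": [2.0, 4.0, 6.0], "B": [10.0, 12.0]}
pop_sizes = {"A": 600, "B": 400}
y_st, v, ci = stratified_estimate(samples, pop_sizes)
print(y_st, v, ci)
```

With these numbers, $\bar{y}_{st} = 0.6 \times 4 + 0.4 \times 11 = 6.8$.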

Weighting methods

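Main idea: A unit selected with probability $\pi_i$ is “representing” $\pi_i^{-1}$ units in the population, and hence should be given weight $\pi_i^{-1}$.

For example, in a stratified random sample:

- A selected unit $i$ in stratum $j$ represents $N_j / n_j$ population units

- Thus, by the Horvitz-Thompson estimate, the population mean can be estimated by the weighted sum $\bar{y}_w = \frac{1}{n} \sum_{i=1}^{n} w_i y_i$, where $w_i = n \pi_i^{-1} / \sum_{k=1}^{n} \pi_k^{-1}$

- It is not hard to show that $\bar{y}_w = \bar{y}_{st}$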
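To see the equality $\bar{y}_w = \bar{y}_{st}$ numerically, the sketch below plugs $\pi_i = n_j / N_j$ into the weighted sum for a hypothetical two-stratum sample with unequal sampling fractions.

```python
from statistics import mean


def ht_weighted_mean(ys, pis):
    # ybar_w = (1/n) * sum_i w_i y_i with w_i = n * pi_i^{-1} / sum_k pi_k^{-1}
    n = len(ys)
    inv = [1.0 / p for p in pis]
    total = sum(inv)
    weights = [n * a / total for a in inv]
    return sum(w * y for w, y in zip(weights, ys)) / n


# Hypothetical stratified sample: stratum -> (sampled values, population size N_j)
strata = {"A": ([2.0, 4.0, 6.0], 600), "B": ([10.0, 12.0], 100)}
N = sum(N_j for _, N_j in strata.values())

ys, pis = [], []
for values, N_j in strata.values():
    for y in values:
        ys.append(y)
        pis.append(len(values) / N_j)   # pi_i = n_j / N_j under stratified random sampling

y_w = ht_weighted_mean(ys, pis)
y_st = sum(N_j / N * mean(values) for values, N_j in strata.values())
print(y_w, y_st, mean(ys))
```

Both estimates equal 5.0 here, while the unweighted sample mean is 6.8, because stratum B is sampled at a higher rate ($1/50$ versus $1/200$).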

Weighting with nonresponses

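If the probability of selecting unit $i$ is $\pi_i$, and the probability that unit $i$ responds, given that it is selected, is $\phi_i$, then

$$P(\text{unit } i \text{ is selected and responds}) = \pi_i \phi_i$$

Suppose there are $r$ units observed (respondents). Then the weighted estimate for $\bar{Y}$ is

$$\bar{y}_w = \frac{1}{r} \sum_{i=1}^{r} w_i y_i, \qquad w_i = \frac{r (\pi_i \phi_i)^{-1}}{\sum_{k=1}^{r} (\pi_k \phi_k)^{-1}}$$

Usually $\phi_i$ is unknown and thus needs to be estimated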
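A sketch of the nonresponse-weighted mean, assuming (unrealistically, as noted above) that each respondent's selection probability $\pi_i$ and response probability $\phi_i$ are known; the values are hypothetical.

```python
def nonresponse_weighted_mean(respondents):
    """ybar_w = (1/r) sum_i w_i y_i, with w_i = r (pi_i phi_i)^{-1} / sum_k (pi_k phi_k)^{-1}.

    respondents: list of (y_i, pi_i, phi_i) for the r observed units.
    """
    r = len(respondents)
    inv = [1.0 / (pi * phi) for _, pi, phi in respondents]
    total = sum(inv)
    return sum(r * a / total * y for a, (y, _, _) in zip(inv, respondents)) / r


# Hypothetical respondents: equal selection probability, but the third unit belongs
# to a group that responds half as often, so it receives twice the weight
respondents = [(2.0, 0.01, 0.8), (4.0, 0.01, 0.8), (9.0, 0.01, 0.4)]
y_w = nonresponse_weighted_mean(respondents)
y_unweighted = sum(y for y, _, _ in respondents) / len(respondents)
print(y_w, y_unweighted)
```

The weighted estimate is 6.0 versus an unweighted respondent mean of 5.0: the third respondent stands in for twice as many selected units as each of the others.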

Weighting class estimator

Weighting class adjustments are used primarily to handle unit nonresponse

Suppose we partition the sample into $J$ “weighting classes”. In weighting class $j$:

- $n_j$: the sample size

- $r_j$: the number of respondents (units with observed data)

- A simple estimator for $\phi_i$ is $\hat{\phi}_i = r_j / n_j$ for unit $i$ in class $j$

For equal probability designs, where $\pi_i$ is constant, the weighting class estimator is

$$\bar{y}_{wc} = \frac{1}{n} \sum_{j=1}^{J} n_j \bar{y}_{jR},$$

where $\bar{y}_{jR}$ is the respondent mean in class $j$

The estimate is unbiased under the following form of the MAR assumption (quasirandomization): data are MCAR within weighting class $j$
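The weighting class estimator needs only class labels and respondent values. A sketch assuming an equal-probability design, with hypothetical class labels and data:

```python
from collections import defaultdict
from statistics import mean


def weighting_class_mean(units):
    """ybar_wc = (1/n) * sum_j n_j * ybar_jR for an equal-probability design.

    units: list of (class_label, y) pairs, with y = None for a nonrespondent.
    Assumes every class has at least one respondent (r_j >= 1).
    """
    n_j = defaultdict(int)          # sample size per class
    observed = defaultdict(list)    # respondent values per class (r_j = len)
    for c, y in units:
        n_j[c] += 1
        if y is not None:
            observed[c].append(y)
    return sum(n_j[c] * mean(observed[c]) for c in n_j) / len(units)


# Hypothetical sample: class "b" has higher outcomes and also responds more often
units = [("a", 1.0), ("a", 2.0), ("a", None), ("a", None),
         ("b", 5.0), ("b", 7.0), ("b", 6.0), ("b", None)]
y_wc = weighting_class_mean(units)
y_cc = mean(y for _, y in units if y is not None)   # complete-case mean
print(y_wc, y_cc)
```

Here $\bar{y}_{wc} = (4 \times 1.5 + 4 \times 6)/8 = 3.75$, below the complete-case mean of 4.2, because class "b" is overrepresented among respondents. Weighting each respondent by $1/\hat{\phi}_i = n_j / r_j$ gives the same number.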

More about weighting class adjustments

Pros: handles bias with one set of weights for multivariate $Y$

Cons: weighting is inefficient and can increase sampling variance if $Y$ is only weakly related to the weighting class variable
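The variance cost can be seen in a small simulation. In the hypothetical setup below, $Y$ is independent of the class and response depends only on the class, so the complete-case mean is already unbiased and weighting can only add noise. The class sizes, response rates, seed, and number of replicates are arbitrary choices.

```python
import random
from statistics import mean, pvariance


def one_replicate(rng, n_per_class=50, rates=(0.9, 0.3)):
    """Return (complete-case mean, weighting class mean) for one simulated sample.

    Y ~ N(0, 1) is independent of the class; response probability depends on the class only.
    """
    classes = []
    for rate in rates:
        ys = [rng.gauss(0.0, 1.0) for _ in range(n_per_class)]
        classes.append([y for y in ys if rng.random() < rate])
    if any(len(obs) == 0 for obs in classes):
        return None                                   # skip replicates with an empty class
    cc = mean([y for obs in classes for y in obs])
    # Equal n_j, so (1/n) * sum_j n_j * ybar_jR is the plain average of class means
    wc = mean([mean(obs) for obs in classes])
    return cc, wc


rng = random.Random(2024)
results = [res for res in (one_replicate(rng) for _ in range(2000)) if res is not None]
var_cc = pvariance([cc for cc, _ in results])
var_wc = pvariance([wc for _, wc in results])
print(var_cc, var_wc, var_wc / var_cc)
```

A back-of-the-envelope calculation gives roughly $\tfrac14(1/45 + 1/15) \approx 0.022$ for the weighting class estimator versus $1/60 \approx 0.017$ for the complete-case mean.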

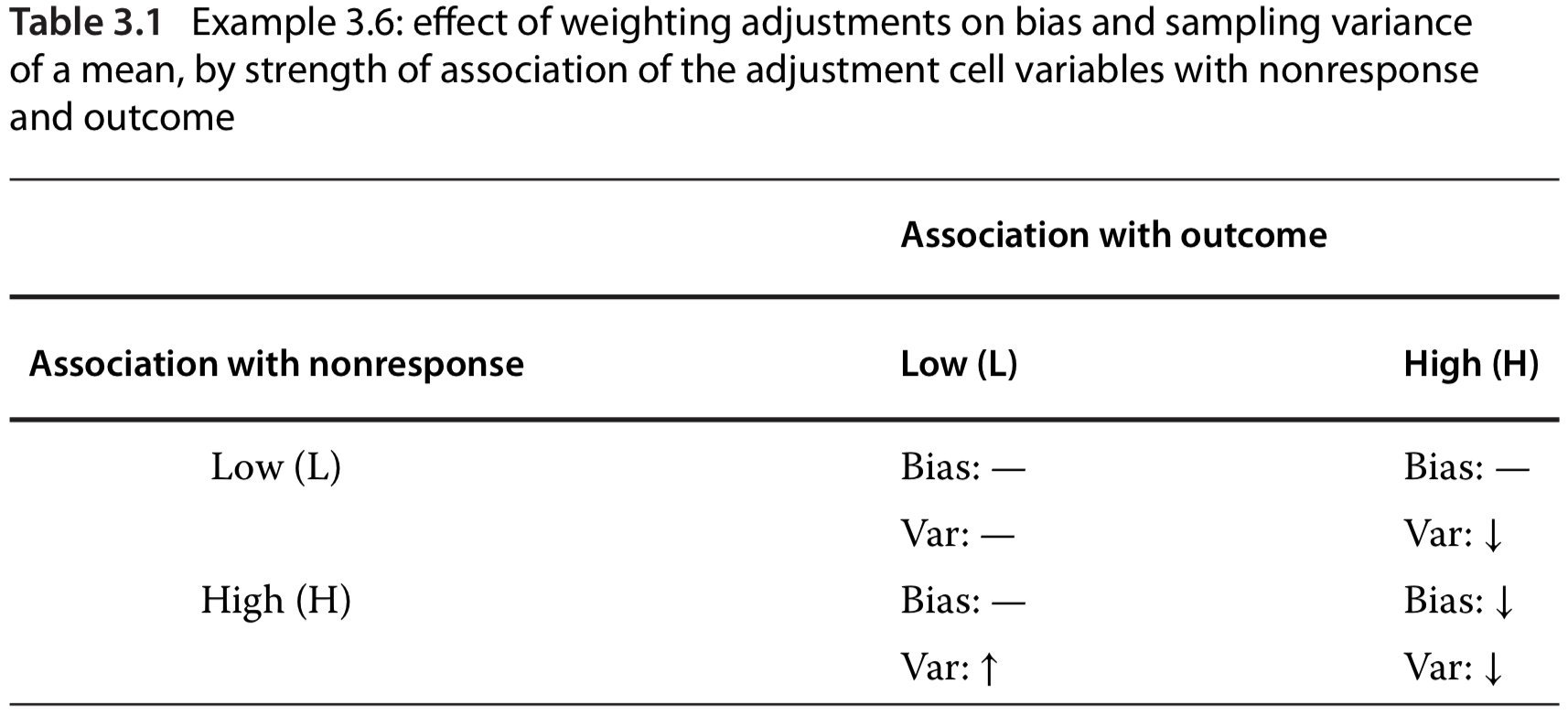

How to choose weighting class adjustments: weighting is only effective for outcomes () that are associated with the adjustment cell variable (). See the right column in the table below.

Propensity weighting

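The theory of propensity scores provides a prescription for choosing the coarsest reduction of $X$ to a weighting class variable $C$ so that quasirandomization is roughly satisfied

Let $X$ denote the variables observed for both respondents and nonrespondents

Suppose the data are MAR, i.e., $P(M \mid X, Y, \phi) = P(M \mid X, \phi)$, where $M$ is the nonresponse indicator ($m_i = 1$ if unit $i$ is a nonrespondent, $m_i = 0$ otherwise) and $\phi$ denotes unknown parameters of the missingness mechanism. Then quasirandomization is satisfied when $C$ is chosen to be $X$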

Response propensity stratification

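Define the response propensity for unit $i$ as $\rho(x_i, \phi) = P(m_i = 0 \mid x_i, \phi)$, assumed to be strictly positive. Then

$$P\big(m_i = 0 \mid y_i, \rho(x_i, \phi), \phi\big) = \rho(x_i, \phi),$$

i.e., respondents are a random subsample within strata defined by the propensity score $\rho(X, \phi)$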

Usually $\phi$ is unknown. So a practical procedure is

- Estimate $\phi$ from a binary regression (e.g., logistic) of $M$ on $X$, based on respondent and nonrespondent data

- Let $C$ be a grouped variable obtained by coarsening $\rho(X, \hat{\phi})$ into 5 or 10 values

Thus, within the same adjustment class, all respondents and nonrespondents have the same value of the grouped propensity score
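The two-step procedure can be sketched as follows, assuming a single covariate $X$ and a logistic response model fit by a hand-written Newton-Raphson loop (so only the standard library is needed). The sketch models the response indicator $R = 1 - M$ directly, which only flips the signs of the coefficients relative to regressing $M$ on $X$. The simulated data, seed, and helper names are hypothetical.

```python
import math
import random
from statistics import mean


def logistic(t):
    return 1.0 / (1.0 + math.exp(-t))


def fit_logistic(xs, rs, iters=25):
    """Newton-Raphson fit of logit P(R = 1 | x) = a + b * x (single covariate)."""
    a = b = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, r in zip(xs, rs):
            p = logistic(a + b * x)
            g0 += r - p                    # score for a
            g1 += (r - p) * x              # score for b
            w = p * (1.0 - p)
            h00 += w                       # Fisher information entries
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det   # Newton step: information^{-1} times score
        b += (h00 * g1 - h01 * g0) / det
    return a, b


def propensity_stratified_mean(xs, ys, coef, n_groups=5):
    """Weighting class mean with classes formed from the estimated response propensity.

    ys holds None for nonrespondents. Assumes every group has at least one respondent.
    """
    a, b = coef
    rho = [logistic(a + b * x) for x in xs]
    n = len(xs)
    order = sorted(range(n), key=lambda i: rho[i])
    group = [0] * n
    for rank, i in enumerate(order):
        group[i] = rank * n_groups // n    # equal-size groups by rank of estimated rho
    total = 0.0
    for g in range(n_groups):
        members = [i for i in range(n) if group[i] == g]
        resp = [ys[i] for i in members if ys[i] is not None]
        total += len(members) * mean(resp)  # n_j * respondent mean in class j
    return total / n


rng = random.Random(7)
n = 5000
xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
ys_full = [1.0 + 2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
rs = [1 if rng.random() < logistic(-0.5 + 1.5 * x) else 0 for x in xs]  # MAR given x
ys = [y if r == 1 else None for y, r in zip(ys_full, rs)]

a_hat, b_hat = fit_logistic(xs, rs)
full = mean(ys_full)                          # benchmark, unavailable in practice
cc = mean(y for y in ys if y is not None)     # complete-case mean
ps = propensity_stratified_mean(xs, ys, (a_hat, b_hat))
print(round(a_hat, 2), round(b_hat, 2), round(full, 2), round(cc, 2), round(ps, 2))
```

In this simulation the complete-case mean is pulled upward (units with large $x$, and hence large $y$, respond more often). Stratifying on quintiles of the estimated propensity removes most of that bias; some remains because propensities still vary within each class.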

An alternative procedure: propensity weighting

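An alternative procedure is to weight respondents directly by the inverse estimated propensity score $\rho(x_i, \hat{\phi})^{-1}$

This method removes nonresponse bias, provided the propensity model is correctly specified

But it may yield estimates with extremely high sampling variance, because respondents with very low estimated response propensities receive large nonresponse weights

Also, weighting directly by inverse propensities places more reliance on correct specification of the regression of $M$ on $X$ than response propensity stratification does, since the latter uses the estimated propensities only to form classes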
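The variance problem can be illustrated with a simulation similar to the stratification sketch. To keep this sketch short, it uses the true propensities of a hypothetical logistic response model rather than estimated ones; the weights are normalized to sum to one, matching the normalized form of $w_i$ used earlier.

```python
import math
import random
from statistics import mean, median


def logistic(t):
    return 1.0 / (1.0 + math.exp(-t))


rng = random.Random(7)
n = 5000
xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
ys = [1.0 + 2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
rho = [logistic(-0.5 + 1.5 * x) for x in xs]   # true response propensity (assumed known here)
rs = [rng.random() < p for p in rho]

# Inverse-propensity weights for respondents, normalized to sum to one below
weights = [1.0 / p for p, r in zip(rho, rs) if r]
resp_y = [y for y, r in zip(ys, rs) if r]
ipw = sum(w * y for w, y in zip(weights, resp_y)) / sum(weights)

full = mean(ys)        # benchmark, unavailable in practice
cc = mean(resp_y)      # complete-case mean
print(round(full, 2), round(cc, 2), round(ipw, 2), round(max(weights) / median(weights), 1))
```

The ratio of the largest to the median weight shows how a few respondents with small $\rho$ carry a large share of the estimate, which is the source of the high sampling variance.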

Example: inverse probability weighted generalized estimating equations (GEE)

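Let $X$ be the covariates of the GEE, with mean model $E(y_i \mid x_i) = g(x_i, \beta)$, and let $Z$ be a fully observed vector that can predict the missingness mechanism

If $P(M \mid X, Y, Z) = P(M \mid X)$, then the unweighted complete-case GEE is unbiased:

$$\sum_{i=1}^{r} D_i(x_i, \beta)\,\big(y_i - g(x_i, \beta)\big) = 0,$$

where the sum is over the $r$ complete units and $D_i$ is a suitably chosen function of $x_i$ and $\beta$

If $P(M \mid X, Y, Z) = P(M \mid X, Z)$, then the inverse probability weighted GEE is unbiased:

$$\sum_{i=1}^{r} w_i(\hat{\alpha})\, D_i(x_i, \beta)\,\big(y_i - g(x_i, \beta)\big) = 0, \qquad w_i(\hat{\alpha}) = \frac{1}{\pi(x_i, z_i \mid \hat{\alpha})},$$

where $\pi(x_i, z_i \mid \hat{\alpha})$ is the probability of being a complete unit, estimated by logistic regression of $M$ on $X$ and $Z$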
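For a linear mean function $g(x_i, \beta) = \beta_0 + \beta_1 x_i$ with $D_i = (1, x_i)^\top$, the (weighted) GEE reduces to (weighted) least squares normal equations. The sketch below compares the unweighted complete-case fit with the inverse probability weighted fit in a hypothetical simulation where completion depends on a fully observed $Z$ that is correlated with $y$. As a simplification, it plugs in the true completion probabilities (a step function of $z$) instead of estimating them by logistic regression.

```python
import random


def weighted_linear_fit(xs, ys, ws):
    """Solve sum_i w_i (1, x_i)^T (y_i - b0 - b1 x_i) = 0 (weighted least squares)."""
    sw = sum(ws)
    swx = sum(w * x for w, x in zip(ws, xs))
    swy = sum(w * y for w, y in zip(ws, ys))
    swxx = sum(w * x * x for w, x in zip(ws, xs))
    swxy = sum(w * x * y for w, x, y in zip(ws, xs, ys))
    b1 = (sw * swxy - swx * swy) / (sw * swxx - swx * swx)
    b0 = (swy - b1 * swx) / sw
    return b0, b1


rng = random.Random(11)
n = 5000
xs, ys, pis = [], [], []
for _ in range(n):
    x = rng.gauss(0.0, 1.0)
    e = rng.gauss(0.0, 1.0)
    z = e + rng.gauss(0.0, 0.5)            # fully observed; predicts y beyond x
    xs.append(x)
    ys.append(1.0 + 2.0 * x + e)           # true beta = (1, 2)
    pis.append(0.9 if z < 0 else 0.3)      # P(complete | x, y, z) depends on z only
complete = [rng.random() < p for p in pis]

cx = [x for x, c in zip(xs, complete) if c]
cy = [y for y, c in zip(ys, complete) if c]
cw = [1.0 / p for p, c in zip(pis, complete) if c]

cc_fit = weighted_linear_fit(cx, cy, [1.0] * len(cx))   # unweighted complete-case GEE
ipw_fit = weighted_linear_fit(cx, cy, cw)                # inverse probability weighted GEE
print([round(b, 2) for b in cc_fit], [round(b, 2) for b in ipw_fit])
```

Because $Z$ is independent of $X$ in this setup, the complete-case slope stays close to 2, but the complete-case intercept is biased downward; weighting by $1/\pi_i$ removes that bias.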

Poststratification

The weighting class estimator uses the sample proportion $n_j / n$ to estimate the population proportion $P_j = N_j / N$.

If we know the population proportions $P_j$ of the weighting classes from an external source (e.g., a census or a large survey), then we can use the poststratified mean to estimate $\bar{Y}$:

$$\bar{y}_{ps} = \sum_{j=1}^{J} P_j \bar{y}_{jR}$$
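A sketch of the poststratified mean, assuming the population shares $P_j$ are known exactly from an external source; the shares and data are hypothetical.

```python
import math
from statistics import mean

# Hypothetical respondent values by weighting class, and known population shares P_j
respondents = {"a": [1.0, 2.0], "b": [5.0, 7.0, 6.0]}
pop_share = {"a": 0.3, "b": 0.7}          # e.g., from a census (assumed values)
assert math.isclose(sum(pop_share.values()), 1.0)

# ybar_ps = sum_j P_j * ybar_jR
y_ps = sum(pop_share[c] * mean(ys) for c, ys in respondents.items())
print(y_ps)
```

Here $\bar{y}_{ps} = 0.3 \times 1.5 + 0.7 \times 6 = 4.65$. Replacing $P_j$ by the sample shares $n_j / n$ would give the weighting class estimator instead.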

Summary of weighting methods

Weighted CC estimates are often simple to compute, but the appropriate standard errors can be hard to compute (even asymptotically)

Weighting methods treat weights as fixed and known, but these nonresponse weights are computed from observed data and hence are subject to sampling uncertainty

Because weighted CC methods discard incomplete units and do not provide an automatic control of sampling variance, they are most useful when

- Number of covariates is small, and

- Sample size is large

Available-Case Analysis

Available-case (AC) analysis

Available-case analysis: for univariate analysis, include all units where that variable is present

- The sample changes from variable to variable according to the pattern of missing data

- This is problematic if the data are not MCAR

- Under MCAR, AC can be used to estimate the mean and variance of a single variable
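A sketch of univariate available-case summaries, with `None` marking missing values in a hypothetical data set.

```python
from statistics import mean, variance


def available_case_summary(rows):
    """Per-variable (mean, variance) using all units where that variable is observed."""
    summaries = []
    for j in range(len(rows[0])):
        values = [row[j] for row in rows if row[j] is not None]
        summaries.append((mean(values), variance(values)))
    return summaries


# Hypothetical data (Y1, Y2); None marks a missing value
rows = [(1.0, 2.0), (2.0, None), (3.0, 6.0), (None, 8.0)]
ac = available_case_summary(rows)
cc_rows = [row for row in rows if None not in row]   # complete-case analysis, for comparison
print(ac, cc_rows)
```

Each variable uses a different subset of units: $Y_1$ uses units 1 to 3 and $Y_2$ uses units 1, 3, and 4, whereas complete-case analysis keeps only units 1 and 3.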

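Pairwise AC: estimates the covariance of $Y_j$ and $Y_k$ based on units where both $Y_j$ and $Y_k$ are observed

- Pairwise covariance estimator: $s_{jk}^{(jk)} = \sum_{i \in I_{jk}} \big(y_{ij} - \bar{y}_j^{(jk)}\big)\big(y_{ik} - \bar{y}_k^{(jk)}\big) / \big(n^{(jk)} - 1\big)$, where $I_{jk}$ is the set of $n^{(jk)}$ units with both $Y_j$ and $Y_k$ observed, and $\bar{y}_j^{(jk)}$, $\bar{y}_k^{(jk)}$ are means over $I_{jk}$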
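The pairwise covariance estimator in plain Python, on hypothetical data (`None` marks a missing value):

```python
from statistics import mean


def pairwise_cov(rows, j, k):
    """s_jk^(jk): covariance of Y_j and Y_k over units with both observed (needs >= 2 such units)."""
    pairs = [(row[j], row[k]) for row in rows if row[j] is not None and row[k] is not None]
    mj = mean(a for a, _ in pairs)          # means over I_jk only
    mk = mean(b for _, b in pairs)
    return sum((a - mj) * (b - mk) for a, b in pairs) / (len(pairs) - 1)


rows = [(1.0, 2.0), (2.0, None), (3.0, 6.0), (None, 8.0)]
print(pairwise_cov(rows, 0, 1))
```

Only units 1 and 3 enter the sum, giving $s_{12}^{(12)} = 4$.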

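Problems with pairwise AC estimators of correlation

Correlation estimator 1: $r_{jk}^{*} = s_{jk}^{(jk)} / \sqrt{s_{jj}^{(j)} s_{kk}^{(k)}}$, where $s_{jj}^{(j)}$ is the variance of $Y_j$ computed from all units with $Y_j$ observed

- Problem: it can lie outside of $[-1, 1]$

Correlation estimator 2 corrects the previous problem: $r_{jk}^{(jk)} = s_{jk}^{(jk)} / \sqrt{s_{jj}^{(jk)} s_{kk}^{(jk)}}$, where both variances are computed over the same set $I_{jk}$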
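The two correlation estimators differ only in which units supply the variances. On the hypothetical data below, $Y_1$ and $Y_2$ are perfectly correlated on the units where both are observed, yet estimator 1 exceeds 1.

```python
import math
from statistics import mean, variance


def observed(rows, *cols):
    """Values of the listed columns for units where all of them are observed."""
    return [tuple(row[c] for c in cols) for row in rows if all(row[c] is not None for c in cols)]


def cov_of_pairs(pairs):
    ma = mean(a for a, _ in pairs)
    mb = mean(b for _, b in pairs)
    return sum((a - ma) * (b - mb) for a, b in pairs) / (len(pairs) - 1)


def corr_estimator_1(rows, j, k):
    # Pairwise covariance divided by available-case standard deviations
    s_jj = variance([v for (v,) in observed(rows, j)])
    s_kk = variance([v for (v,) in observed(rows, k)])
    return cov_of_pairs(observed(rows, j, k)) / math.sqrt(s_jj * s_kk)


def corr_estimator_2(rows, j, k):
    # Covariance and both variances computed over the same units I_jk
    pairs = observed(rows, j, k)
    s_jj = variance([a for a, _ in pairs])
    s_kk = variance([b for _, b in pairs])
    return cov_of_pairs(pairs) / math.sqrt(s_jj * s_kk)


# Hypothetical data (Y1, Y2); None marks a missing value
rows = ([(float(t), float(t)) for t in range(1, 5)]
        + [(float(t), None) for t in range(1, 5)]
        + [(None, float(t)) for t in range(1, 5)])
print(corr_estimator_1(rows, 0, 1), corr_estimator_2(rows, 0, 1))
```

Estimator 1 gives $7/6$ (pairwise covariance $5/3$ divided by available-case variances of $10/7$), while estimator 2 gives exactly 1.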

Under MCAR, all these estimators of covariance and correlation are consistent

However, when $K \geq 3$, both correlation estimators can yield correlation matrices that are not positive definite!

- An extreme example:
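The sketch below builds one such extreme case from hypothetical data: each pair of variables is observed together on a disjoint block of units, with $Y_1$ perfectly positively correlated with $Y_2$ and with $Y_3$, but $Y_2$ perfectly negatively correlated with $Y_3$. A positive semidefinite matrix has a nonnegative determinant, so a negative determinant rules it out.

```python
import math
from statistics import mean


def corr_pairwise(rows, j, k):
    """Correlation estimator 2: all moments computed over units with Y_j and Y_k observed."""
    pairs = [(r[j], r[k]) for r in rows if r[j] is not None and r[k] is not None]
    ma = mean(a for a, _ in pairs)
    mb = mean(b for _, b in pairs)
    sab = sum((a - ma) * (b - mb) for a, b in pairs)
    saa = sum((a - ma) ** 2 for a, _ in pairs)
    sbb = sum((b - mb) ** 2 for _, b in pairs)
    return sab / math.sqrt(saa * sbb)   # the (n - 1) divisors cancel


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


# Hypothetical data (Y1, Y2, Y3): each pair is observed together on a different block of 4 units
rows = ([(t, t, None) for t in (1.0, 2.0, 3.0, 4.0)]
        + [(t, None, t) for t in (1.0, 2.0, 3.0, 4.0)]
        + [(None, t, 5.0 - t) for t in (1.0, 2.0, 3.0, 4.0)])
R = [[1.0 if j == k else corr_pairwise(rows, j, k) for k in range(3)] for j in range(3)]
print(R, det3(R))
```

The resulting matrix has $r_{12} = r_{13} = 1$ and $r_{23} = -1$, a combination no complete data set could produce, and its determinant is $-4$.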

Compare CC and AC methods

When data are MCAR and correlations are mild, AC methods are more efficient than CC

When correlations are large, CC methods are usually better

References

- Little, R. J., & Rubin, D. B. (2019). Statistical Analysis with Missing Data, 3rd Edition. John Wiley & Sons.