
Notation: in binary classification, the response is $y \in \{0, 1\}$ and $x$ denotes the predictors

We are interested in fitting a model $q(x)$ for the true conditional class 1 probability $\eta(x) = P(Y = 1 \mid x)$

Two types of problems

- Classification: estimating a region of the form $\{x : \eta(x) > c\}$ for a cutoff $c$

- Class probability estimation: approximating $\eta(x)$ by fitting a model $q(x; b)$, where $b$ are parameters to be estimated

Surrogate criteria for estimation, e.g.,

- Log-loss: $L(y \mid q) = -y \log(q) - (1 - y) \log(1 - q)$

- Squared error loss: $L(y \mid q) = y (1 - q)^2 + (1 - y) q^2$
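As a minimal illustration of the two surrogate criteria, the Python sketch below evaluates log-loss and squared error loss on a handful of labels and predicted probabilities. The helper names (`log_loss`, `squared_error_loss`, `empirical_risk`) and the toy data are hypothetical, chosen only for this example; it assumes $0 < q < 1$ so that the logarithms are finite.

```python
import math


def log_loss(y: int, q: float) -> float:
    """Log-loss L(y|q) = -y log(q) - (1 - y) log(1 - q), for y in {0, 1} and 0 < q < 1."""
    return -y * math.log(q) - (1 - y) * math.log(1 - q)


def squared_error_loss(y: int, q: float) -> float:
    """Squared error loss L(y|q) = y (1 - q)^2 + (1 - y) q^2, for y in {0, 1}."""
    return y * (1 - q) ** 2 + (1 - y) * q ** 2


def empirical_risk(loss, ys, qs) -> float:
    """Average loss of predicted class 1 probabilities qs against 0/1 labels ys."""
    return sum(loss(y, q) for y, q in zip(ys, qs)) / len(ys)


# Toy labels and predicted class 1 probabilities (made up for illustration).
ys = [1, 0, 1, 1]
qs = [0.9, 0.2, 0.6, 0.5]
print(empirical_risk(log_loss, ys, qs))
print(empirical_risk(squared_error_loss, ys, qs))
```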

Surrogate criteria of classification are exactly the primary criteria of class probability estimation

Proper Scoring Rules

Proper scoring rule

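Fitting a binary model amounts to minimizing a loss function $L(y \mid q)$, which can be written in terms of two partial losses as $L(y \mid q) = y\, L_1(1 - q) + (1 - y)\, L_0(q)$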

In game theory, the agent’s goal is to maximize expected score (or minimize expected loss)

- A scoring rule is proper if truthfulness maximizes expected score

- It is strictly proper if truthfulness uniquely maximizes expected score

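In the context of binary response data, Fisher consistency holds pointwise if $\eta \in \arg\min_{q \in [0, 1]} R(\eta \mid q)$ for every $\eta \in [0, 1]$, where $R(\eta \mid q) = \eta\, L(1 \mid q) + (1 - \eta)\, L(0 \mid q)$ is the expected loss under $Y \sim \text{Bernoulli}(\eta)$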

Fisher consistency is the defining property of proper scoring rules
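Fisher consistency can be checked numerically: the sketch below minimizes the expected loss $R(\eta \mid q) = \eta\, L(1 \mid q) + (1 - \eta)\, L(0 \mid q)$ over a grid of $q$ values and compares the minimizer with $\eta$. It is a brute-force check that assumes a grid with spacing 0.001 is fine enough; the function names are illustrative only.

```python
import math


def expected_loss(loss, eta: float, q: float) -> float:
    """R(eta|q) = eta * L(1|q) + (1 - eta) * L(0|q), the expected loss when Y ~ Bernoulli(eta)."""
    return eta * loss(1, q) + (1 - eta) * loss(0, q)


def grid_minimizer(loss, eta: float, n: int = 999) -> float:
    """Return the point of the grid {1/(n+1), ..., n/(n+1)} that minimizes R(eta|q)."""
    grid = [(i + 1) / (n + 1) for i in range(n)]
    return min(grid, key=lambda q: expected_loss(loss, eta, q))


def log_loss(y: int, q: float) -> float:
    return -y * math.log(q) - (1 - y) * math.log(1 - q)


def squared_error_loss(y: int, q: float) -> float:
    return y * (1 - q) ** 2 + (1 - y) * q ** 2


# For a proper scoring rule, the minimizer should coincide with eta (up to grid resolution).
for eta in (0.1, 0.3, 0.75):
    print(eta, grid_minimizer(log_loss, eta), grid_minimizer(squared_error_loss, eta))
```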

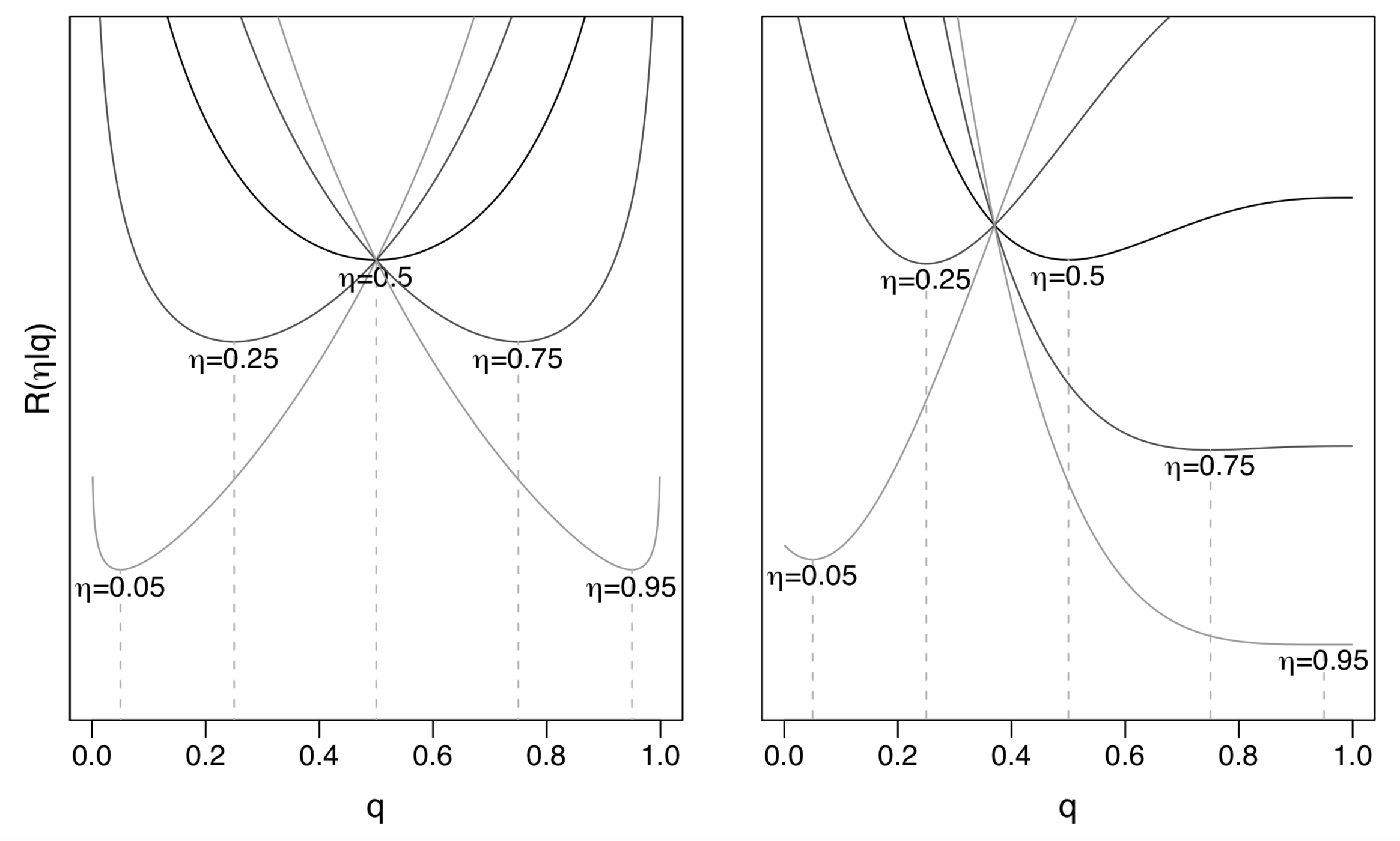

Visualization of two proper scoring rules

- Left: log-loss, i.e., the Beta loss with $\alpha = \beta = 0$

- Right: a Beta loss tailored for classification with false positive cost $c$ and false negative cost $1 - c$

Commonly Used Proper Scoring Rules

How to check whether a scoring rule for binary response data is proper

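Suppose the partial losses $L_1(1 - q)$ and $L_0(q)$ are smooth. The expected loss is $R(\eta \mid q) = \eta\, L_1(1 - q) + (1 - \eta)\, L_0(q)$, and setting $\partial R(\eta \mid q) / \partial q = -\eta\, L_1'(1 - q) + (1 - \eta)\, L_0'(q)$ to zero at $q = \eta$ shows that the proper scoring rule property implies

$$\frac{L_1'(1 - q)}{1 - q} = \frac{L_0'(q)}{q} =: \omega(q)$$

Therefore, a scoring rule is proper if $L_1'(1 - q) = (1 - q)\, \omega(q)$ and $L_0'(q) = q\, \omega(q)$ for a weight function $\omega(q) \ge 0$: then $\partial R(\eta \mid q) / \partial q = (q - \eta)\, \omega(q)$, which is $\le 0$ for $q < \eta$ and $\ge 0$ for $q > \eta$, so $q = \eta$ minimizes the expected loss

A scoring rule is strictly proper if, in addition, $\omega$ is not zero almost everywhere on any open interval of $(0, 1)$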
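The first-order condition suggests a quick numerical test: estimate $L_1'(1 - q)/(1 - q)$ and $L_0'(q)/q$ by central differences and check that they agree and are non-negative. The sketch assumes the partial losses are passed as ordinary functions of their own arguments (so `L1` is evaluated at $1 - q$); the step size `h` is an arbitrary choice.

```python
import math


def weight_estimates(L1, L0, q: float, h: float = 1e-6):
    """Central-difference estimates of L1'(1-q)/(1-q) and L0'(q)/q.

    L1 is the partial loss for y = 1 written as a function of its own argument t = 1 - q,
    L0 is the partial loss for y = 0 written as a function of q.
    For a proper scoring rule both ratios should agree and be >= 0.
    """
    d1 = (L1(1 - q + h) - L1(1 - q - h)) / (2 * h)  # L1'(1 - q)
    d0 = (L0(q + h) - L0(q - h)) / (2 * h)          # L0'(q)
    return d1 / (1 - q), d0 / q


# Log-loss: L1(t) = -log(1 - t), so L1(1 - q) = -log(q); L0(q) = -log(1 - q)
log_L1 = lambda t: -math.log(1 - t)
log_L0 = lambda q: -math.log(1 - q)

# Squared error loss: L1(t) = t^2, so L1(1 - q) = (1 - q)^2; L0(q) = q^2
sq_L1 = lambda t: t ** 2
sq_L0 = lambda q: q ** 2

for q in (0.2, 0.5, 0.8):
    print(q, weight_estimates(log_L1, log_L0, q), 1 / (q * (1 - q)))  # log-loss: omega = 1/(q(1-q))
    print(q, weight_estimates(sq_L1, sq_L0, q))                        # squared error: omega = 2
```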

Log-loss

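Log-loss is the negative log-likelihood of the Bernoulli distribution

Partial losses for log-loss: $L_1(1 - q) = -\log(q)$ and $L_0(q) = -\log(1 - q)$

Expected loss for log-loss: $R(\eta \mid q) = -\eta \log(q) - (1 - \eta) \log(1 - q)$

Log-loss is a strictly proper scoring rule: its weight function is $\omega(q) = L_0'(q)/q = \frac{1}{q(1 - q)} > 0$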
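One way to see strict properness of log-loss directly is a standard identity that the slides do not state: the excess expected loss $R(\eta \mid q) - R(\eta \mid \eta)$ equals the Kullback-Leibler divergence between Bernoulli($\eta$) and Bernoulli($q$), which is non-negative and zero only at $q = \eta$. The sketch below checks this numerically for $0 < \eta, q < 1$; the function names are illustrative.

```python
import math


def log_loss_expected(eta: float, q: float) -> float:
    """R(eta|q) = -eta log(q) - (1 - eta) log(1 - q)."""
    return -eta * math.log(q) - (1 - eta) * math.log(1 - q)


def bernoulli_kl(eta: float, q: float) -> float:
    """KL(Bernoulli(eta) || Bernoulli(q)), for 0 < eta, q < 1."""
    return eta * math.log(eta / q) + (1 - eta) * math.log((1 - eta) / (1 - q))


eta = 0.3
for q in (0.1, 0.3, 0.6):
    excess = log_loss_expected(eta, q) - log_loss_expected(eta, eta)
    print(q, excess, bernoulli_kl(eta, q))  # the two columns agree; both vanish at q = eta
```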

Squared error loss

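Squared error loss is also known as the Brier score

Partial losses for squared error loss: $L_1(1 - q) = (1 - q)^2$ and $L_0(q) = q^2$

Expected loss for squared error loss: $R(\eta \mid q) = \eta (1 - q)^2 + (1 - \eta) q^2 = (q - \eta)^2 + \eta (1 - \eta)$

Squared error loss is a strictly proper scoring rule: its weight function is the constant $\omega(q) = 2$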
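The decomposition $R(\eta \mid q) = (q - \eta)^2 + \eta(1 - \eta)$ makes strict properness of squared error loss visible, since only the first term depends on $q$. A short numerical check, with illustrative function names:

```python
def squared_expected(eta: float, q: float) -> float:
    """R(eta|q) = eta (1 - q)^2 + (1 - eta) q^2."""
    return eta * (1 - q) ** 2 + (1 - eta) * q ** 2


def decomposition(eta: float, q: float) -> float:
    """(q - eta)^2 + eta (1 - eta): squared distance to eta plus a term free of q."""
    return (q - eta) ** 2 + eta * (1 - eta)


for eta, q in [(0.3, 0.3), (0.3, 0.7), (0.9, 0.5)]:
    print(eta, q, squared_expected(eta, q), decomposition(eta, q))
```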

Misclassification loss

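Usually, misclassification loss uses $q = 1/2$ as the cutoff

Partial losses for misclassification loss: $L_1(1 - q) = \mathbf{1}[q \le 1/2]$ and $L_0(q) = \mathbf{1}[q > 1/2]$

Expected loss for misclassification loss: $R(\eta \mid q) = \eta\, \mathbf{1}[q \le 1/2] + (1 - \eta)\, \mathbf{1}[q > 1/2]$

When $\eta > 1/2$, any $q > 1/2$ minimizes the expected misclassification loss, and when $\eta < 1/2$, any $q \le 1/2$ does. The true $\eta$ is always among the minimizers, so misclassification loss is a proper scoring rule, but it is not strictly proper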
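To illustrate why misclassification loss is proper but not strictly proper, the sketch below lists every grid point $q$ that attains the minimal expected loss. For $\eta = 0.7$ it returns all grid points above $1/2$ (including $0.7$ itself), not just $q = \eta$. The grid resolution is an arbitrary choice.

```python
def misclassification_expected(eta: float, q: float, cutoff: float = 0.5) -> float:
    """R(eta|q) = eta * 1[q <= cutoff] + (1 - eta) * 1[q > cutoff]."""
    return eta * (q <= cutoff) + (1 - eta) * (q > cutoff)


def minimizers(eta: float, n: int = 99):
    """All grid points q = i/(n+1), i = 1..n, that attain the minimal expected loss."""
    grid = [(i + 1) / (n + 1) for i in range(n)]
    risks = [misclassification_expected(eta, q) for q in grid]
    best = min(risks)
    return [q for q, r in zip(grid, risks) if r == best]


m = minimizers(0.7)
print(len(m), min(m), max(m))  # every grid point q > 1/2 is a minimizer
```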

A counter-example of proper scoring rule: absolute loss

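Because $y \in \{0, 1\}$, the absolute deviation becomes $L(y \mid q) = |y - q| = y (1 - q) + (1 - y) q$

Absolute deviation is not a proper scoring rule, because its expected loss $R(\eta \mid q) = \eta (1 - q) + (1 - \eta) q$ is linear in $q$ and is minimized by $q = 1$ for $\eta > 1/2$, and by $q = 0$ for $\eta < 1/2$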
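A grid search makes the failure of absolute loss concrete: because $R(\eta \mid q)$ is linear in $q$, its minimizer over $[0, 1]$ sits at an endpoint rather than at $\eta$. The grid (including the endpoints 0 and 1) is an illustrative assumption.

```python
def absolute_expected(eta: float, q: float) -> float:
    """R(eta|q) = eta (1 - q) + (1 - eta) q = eta + q (1 - 2 eta), which is linear in q."""
    return eta * (1 - q) + (1 - eta) * q


def absolute_minimizer(eta: float, n: int = 100) -> float:
    """Grid minimizer of R(eta|q) over q in {0, 1/n, ..., 1}, endpoints included."""
    grid = [i / n for i in range(n + 1)]
    return min(grid, key=lambda q: absolute_expected(eta, q))


for eta in (0.2, 0.7):
    print(eta, absolute_minimizer(eta))  # the minimizer is 0 or 1, not eta
```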

Structure of Proper Scoring Rules

Structure of proper scoring rules

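Theorem: Let $\omega(dt)$ be a positive measure on $(0, 1)$ that is finite on intervals $(\epsilon, 1 - \epsilon)$ for all $\epsilon > 0$. Then the following defines a proper scoring rule:

$$L_1(1 - q) = \int_q^1 (1 - t)\, \omega(dt), \qquad L_0(q) = \int_0^q t\, \omega(dt)$$

The proper scoring rule is strict iff $\omega(dt)$ has non-zero mass on every open interval of $(0, 1)$

The fixed limits $0$ and $1$ are somewhat arbitrary

Note that for log-loss, $L_1(1 - q) = -\log(q)$ is unbounded (goes to infinity) near $q = 0$, and $L_0(q) = -\log(1 - q)$ is unbounded near $q = 1$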

Except for log-loss, all other common proper scoring rules seem to satisfy

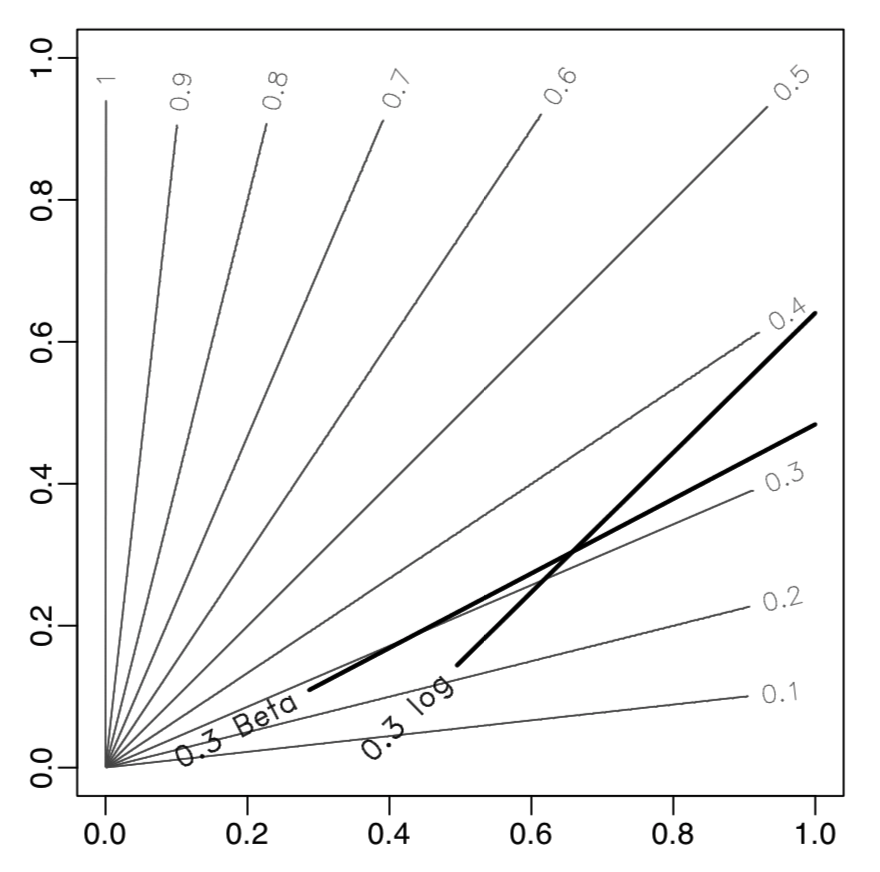
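The theorem can be exercised numerically: given a weight density $\omega$, build the partial losses by quadrature and check that the expected loss is minimized at $q = \eta$. This sketch assumes $\omega$ has a density that is finite on all of $[0, 1]$ (so the midpoint rule is accurate), uses the integration limits 0 and 1, and picks the hypothetical weight $\omega(t) = 1 + t$ purely for illustration.

```python
def midpoint(f, a: float, b: float, n: int = 1_000) -> float:
    """Composite midpoint rule for the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))


def losses_from_weight(omega, q: float):
    """Partial losses from a weight density omega, with integration limits 0 and 1:
    L1(1 - q) = int_q^1 (1 - t) omega(t) dt and L0(q) = int_0^q t omega(t) dt."""
    L1 = midpoint(lambda t: (1 - t) * omega(t), q, 1.0)
    L0 = midpoint(lambda t: t * omega(t), 0.0, q)
    return L1, L0


def expected_loss(omega, eta: float, q: float) -> float:
    """R(eta|q) = eta L1(1 - q) + (1 - eta) L0(q) for the rule built from omega."""
    L1, L0 = losses_from_weight(omega, q)
    return eta * L1 + (1 - eta) * L0


omega = lambda t: 1.0 + t  # hypothetical positive weight, chosen only for illustration
grid = [i / 100 for i in range(1, 100)]
for eta in (0.25, 0.6):
    q_best = min(grid, key=lambda q: expected_loss(omega, eta, q))
    print(eta, q_best)  # properness: the grid minimizer coincides with eta
```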

Proper scoring rules are mixtures of cost-weighted misclassification losses

Connection between the false positive (FP) / false negative (FN) costs and the classification cutoff

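Suppose the costs of FP and FN sum up to 1: an FP costs $c$ and an FN costs $1 - c$

- FP: classifying an observation with $y = 0$ as class 1 has a cost $c$, and expected cost $c\, (1 - \eta)$

- FN: classifying an observation with $y = 1$ as class 0 has a cost $1 - c$, and expected cost $(1 - c)\, \eta$

The optimal classification is therefore class 1 iff $(1 - c)\, \eta > c\, (1 - \eta)$, i.e., iff $\eta > c$

- Since we don't know the truth $\eta$, we classify as class 1 when $q > c$

Therefore, the classification cutoff equals the FP cost $c$

- Standard classification assumes the costs of FP and FN are the same, so the classification cutoff is $c = 1/2$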
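The cost comparison above can be written as a tiny decision rule. In this sketch (function names hypothetical), an FP costs $c$ and an FN costs $1 - c$; predicting class 1 whenever its expected cost is lower reproduces the cutoff rule $\eta > c$.

```python
def expected_costs(eta: float, c: float) -> dict:
    """Expected cost of each decision when an FP costs c and an FN costs 1 - c.

    Deciding class 1 risks an FP (probability 1 - eta); deciding class 0 risks an FN (probability eta).
    """
    return {1: c * (1 - eta), 0: (1 - c) * eta}


def bayes_decision(eta: float, c: float) -> int:
    """Pick the cheaper decision: class 1 iff (1 - c) eta > c (1 - eta), i.e. iff eta > c."""
    costs = expected_costs(eta, c)
    return 1 if costs[0] > costs[1] else 0


for eta in (0.2, 0.35, 0.8):
    print(eta, bayes_decision(eta, c=0.3))
```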

Cost-weighted misclassification errors

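Cost-weighted misclassification errors: $L_c(y \mid q) = y\, (1 - c)\, \mathbf{1}[q \le c] + (1 - y)\, c\, \mathbf{1}[q > c]$

Shuford-Albert-Massengill-Savage-Schervish theorem: an integral representation of proper scoring rules

$$L(y \mid q) = \int_0^1 L_c(y \mid q)\, \omega(dc) = \int_0^1 L_c(y \mid q)\, \omega(c)\, dc$$

- The second equality holds if $\omega(dc)$ is absolutely continuous w.r.t. Lebesgue measure, with density $\omega(c)$

- This can be used to tailor losses to specific classification problems with cutoffs other than $q = 1/2$, by designing suitable weight functions $\omega$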
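As a sanity check of the integral representation, the sketch below approximates $\int_0^1 L_c(y \mid q)\, \omega(c)\, dc$ with the midpoint rule. With the uniform weight $\omega(c) = 1$ it recovers $q^2/2$ for $y = 0$ and $(1 - q)^2/2$ for $y = 1$, i.e., half the squared error loss. Passing a different `omega` is how one would tailor the loss; the discretization size `n` is an arbitrary assumption.

```python
def cost_weighted_loss(y: int, q: float, c: float) -> float:
    """L_c(y|q) = y (1 - c) 1[q <= c] + (1 - y) c 1[q > c]."""
    return y * (1 - c) * (q <= c) + (1 - y) * c * (q > c)


def mixture_loss(y: int, q: float, omega, n: int = 10_000) -> float:
    """Midpoint-rule approximation of int_0^1 L_c(y|q) omega(c) dc."""
    h = 1.0 / n
    total = 0.0
    for k in range(n):
        c = (k + 0.5) * h
        total += cost_weighted_loss(y, q, c) * omega(c)
    return h * total


uniform = lambda c: 1.0  # omega(c) = 1
q = 0.3
print(mixture_loss(0, q, uniform), q ** 2 / 2)        # L0(q) = q^2 / 2
print(mixture_loss(1, q, uniform), (1 - q) ** 2 / 2)  # L1(1 - q) = (1 - q)^2 / 2
```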

The paper proposes to use Iteratively Reweighted Least Squares (IRLS) to fit linear models with proper scoring rules
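The slides do not spell out the IRLS updates, so the following is a minimal sketch under our own derivation, not the paper's code: Fisher-scoring IRLS for a logit-link linear model $q(x) = 1/(1 + e^{-F(x)})$ with $F(x) = b_0 + b_1 x$. It uses the fact that $\partial L(y \mid q)/\partial q = (q - y)\,\omega(q)$ for a proper scoring rule, giving weights $w_i = \omega(q_i)\, q_i'^2$ and working responses $z_i = F_i + (y_i - q_i)/q_i'$ with $q' = q(1 - q)$. As an assumption, $\omega(q) = q^{\alpha - 1}(1 - q)^{\beta - 1}$; with $\alpha = \beta = 0$ the weights reduce to $q(1 - q)$ and the iteration is ordinary logistic-regression IRLS. The toy data are made up, and there is no step-size control, so convergence for other $(\alpha, \beta)$ is not guaranteed.

```python
import math


def sigmoid(f: float) -> float:
    """Inverse of the logit link: q = 1 / (1 + exp(-f))."""
    return 1.0 / (1.0 + math.exp(-f))


def beta_weight(q: float, alpha: float, beta: float) -> float:
    """Assumed weight function omega(q) = q^(alpha - 1) (1 - q)^(beta - 1)."""
    return q ** (alpha - 1) * (1 - q) ** (beta - 1)


def irls_logit(xs, ys, alpha: float = 0.0, beta: float = 0.0, iters: int = 50):
    """Fisher-scoring IRLS for q(x) = sigmoid(b0 + b1 x) under the scoring rule with weight omega.

    Each iteration solves the 2x2 weighted least squares problem with
    weights w_i = omega(q_i) * q_i'^2 and working responses z_i = F_i + (y_i - q_i) / q_i',
    where F_i = b0 + b1 x_i and q_i' = q_i (1 - q_i) = dq/dF for the logit link.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(iters):
        s00 = s01 = s11 = r0 = r1 = 0.0
        for x, y in zip(xs, ys):
            f = b0 + b1 * x
            q = sigmoid(f)
            dq = q * (1 - q)
            w = beta_weight(q, alpha, beta) * dq ** 2
            z = f + (y - q) / dq
            # Accumulate X^T W X and X^T W z for the design row (1, x).
            s00 += w
            s01 += w * x
            s11 += w * x * x
            r0 += w * z
            r1 += w * x * z
        # Solve the 2x2 normal equations by Cramer's rule.
        det = s00 * s11 - s01 * s01
        b0 = (s11 * r0 - s01 * r1) / det
        b1 = (s00 * r1 - s01 * r0) / det
    return b0, b1


# Made-up, non-separable toy data.
xs = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 1, 0, 1, 0, 1, 1, 1]
b0, b1 = irls_logit(xs, ys)  # alpha = beta = 0: plain logistic regression
print(b0, b1)
```

At convergence with $\alpha = \beta = 0$, the fitted probabilities satisfy the logistic-regression score equations $\sum_i (y_i - q_i) = 0$ and $\sum_i x_i (y_i - q_i) = 0$.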

Beta Family of Proper Scoring Rules

Beta family of proper scoring rules

This paper introduced a flexible 2-parameter family of proper scoring rules

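Loss function of the Beta family proper scoring rules, with weight function $\omega(t) = t^{\alpha - 1} (1 - t)^{\beta - 1}$ and $\alpha, \beta > -1$:

$$L_1(1 - q) = \int_q^1 t^{\alpha - 1} (1 - t)^{\beta}\, dt = B(\alpha, \beta + 1)\, \big[1 - I_q(\alpha, \beta + 1)\big]$$

$$L_0(q) = \int_0^q t^{\alpha} (1 - t)^{\beta - 1}\, dt = B(\alpha + 1, \beta)\, I_q(\alpha + 1, \beta)$$

- $B$ is the Beta function and $I_q$ is the regularized incomplete Beta function (see the definitions below); the closed forms on the right require $\alpha, \beta > 0$

Log-loss and squared error loss are special cases, and misclassification loss is a limiting case

- Log-loss: $\alpha = \beta = 0$

- Squared error loss: $\alpha = \beta = 1$ (which gives half the squared error loss)

- Misclassification loss: $\alpha = \beta \to \infty$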
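The Beta-family partial losses can also be evaluated directly from their integral forms, which covers cases such as $\alpha = \beta = 0$ where the closed forms via $B$ and $I_q$ do not apply. This stdlib-only sketch uses midpoint quadrature instead of `scipy.special`; its accuracy degrades when the integrand is singular at an endpoint ($\alpha < 0$ for $L_0$, $\beta < 0$ for $L_1$). The checks compare against the log-loss and squared error special cases; the function names are illustrative.

```python
import math


def midpoint(f, a: float, b: float, n: int = 20_000) -> float:
    """Composite midpoint rule; never evaluates f at the endpoints a, b."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))


def beta_partial_losses(q: float, alpha: float, beta: float):
    """Beta-family partial losses for omega(t) = t^(alpha-1) (1-t)^(beta-1):
    L1(1 - q) = int_q^1 t^(alpha-1) (1-t)^beta dt and L0(q) = int_0^q t^alpha (1-t)^(beta-1) dt."""
    L1 = midpoint(lambda t: t ** (alpha - 1) * (1 - t) ** beta, q, 1.0)
    L0 = midpoint(lambda t: t ** alpha * (1 - t) ** (beta - 1), 0.0, q)
    return L1, L0


q = 0.2
print(beta_partial_losses(q, 0, 0), (-math.log(q), -math.log(1 - q)))  # log-loss
print(beta_partial_losses(q, 1, 1), ((1 - q) ** 2 / 2, q ** 2 / 2))    # half squared error
```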

Special functions and Python / R implementations

Beta function: $B(a, b) = \int_0^1 t^{a - 1} (1 - t)^{b - 1}\, dt$

- Python implementation: `scipy.special.beta(a, b)`

- R implementation: `beta(a, b)`

Regularized incomplete Beta function: $I_x(a, b) = \frac{1}{B(a, b)} \int_0^x t^{a - 1} (1 - t)^{b - 1}\, dt$

- Python implementation: `scipy.special.betainc(a, b, x)`

- R implementation: `pbeta(x, a, b)`
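For readers without SciPy or R at hand, the sketch below computes $B(a, b) = \Gamma(a)\Gamma(b)/\Gamma(a + b)$ with `math.lgamma`, and, for positive integer $a, b$ only, the regularized incomplete Beta function via the identity $I_x(a, b) = P(\text{Binomial}(a + b - 1, x) \ge a)$. It is a cross-check under that integer restriction, not a replacement for `scipy.special.betainc` or `pbeta`; the argument order `(a, b, x)` mirrors `scipy.special.betainc`, and the function names are hypothetical.

```python
import math


def beta_fn(a: float, b: float) -> float:
    """B(a, b) = Gamma(a) Gamma(b) / Gamma(a + b), computed on the log scale; a, b > 0."""
    return math.exp(math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b))


def betainc_int(a: int, b: int, x: float) -> float:
    """Regularized incomplete Beta I_x(a, b) for positive integers a, b.

    Uses I_x(a, b) = P(Binomial(a + b - 1, x) >= a).
    """
    n = a + b - 1
    return sum(math.comb(n, j) * x ** j * (1 - x) ** (n - j) for j in range(a, n + 1))


print(beta_fn(2, 3))           # about 1/12
print(betainc_int(2, 1, 0.5))  # 0.25, since I_x(2, 1) = x^2
```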

Tailor proper scoring rules for cost-weighted misclassification

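We can use $\alpha \neq \beta$ when FP and FN costs are not viewed as equal

Since the Beta family weight $\omega(c) = c^{\alpha - 1} (1 - c)^{\beta - 1}$ is, up to normalization, a Beta$(\alpha, \beta)$ density placed on the FP cost $c$, we can use mean/variance matching to elicit $\alpha$ and $\beta$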

Alternatively, we can match the mode
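Both elicitation recipes reduce to closed-form formulas for a Beta$(\alpha, \beta)$ distribution on $c$. The sketch below uses the standard facts mean $= \alpha/(\alpha + \beta)$, variance $= \text{mean}(1 - \text{mean})/(\alpha + \beta + 1)$, and mode $= (\alpha - 1)/(\alpha + \beta - 2)$ for $\alpha, \beta > 1$. Since the mode alone cannot determine two parameters, the mode-matching helper assumes the user also fixes $\alpha + \beta$; that extra input is our assumption, not something stated on the slide, and the function names are hypothetical.

```python
def beta_from_mean_var(mu: float, var: float):
    """Mean/variance matching for a Beta(alpha, beta) distribution on the FP cost c.

    Uses mean = alpha / (alpha + beta) and var = mean (1 - mean) / (alpha + beta + 1).
    """
    if not (0 < mu < 1 and 0 < var < mu * (1 - mu)):
        raise ValueError("need 0 < mu < 1 and 0 < var < mu * (1 - mu)")
    total = mu * (1 - mu) / var - 1  # alpha + beta
    return mu * total, (1 - mu) * total


def beta_from_mode(mode: float, total: float):
    """Mode matching, mode = (alpha - 1) / (alpha + beta - 2), given an assumed total = alpha + beta > 2."""
    return 1 + mode * (total - 2), 1 + (1 - mode) * (total - 2)


print(beta_from_mean_var(0.5, 0.05))  # approximately (2, 2)
print(beta_from_mode(0.3, 12.0))      # approximately (4, 8)
```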

Examples

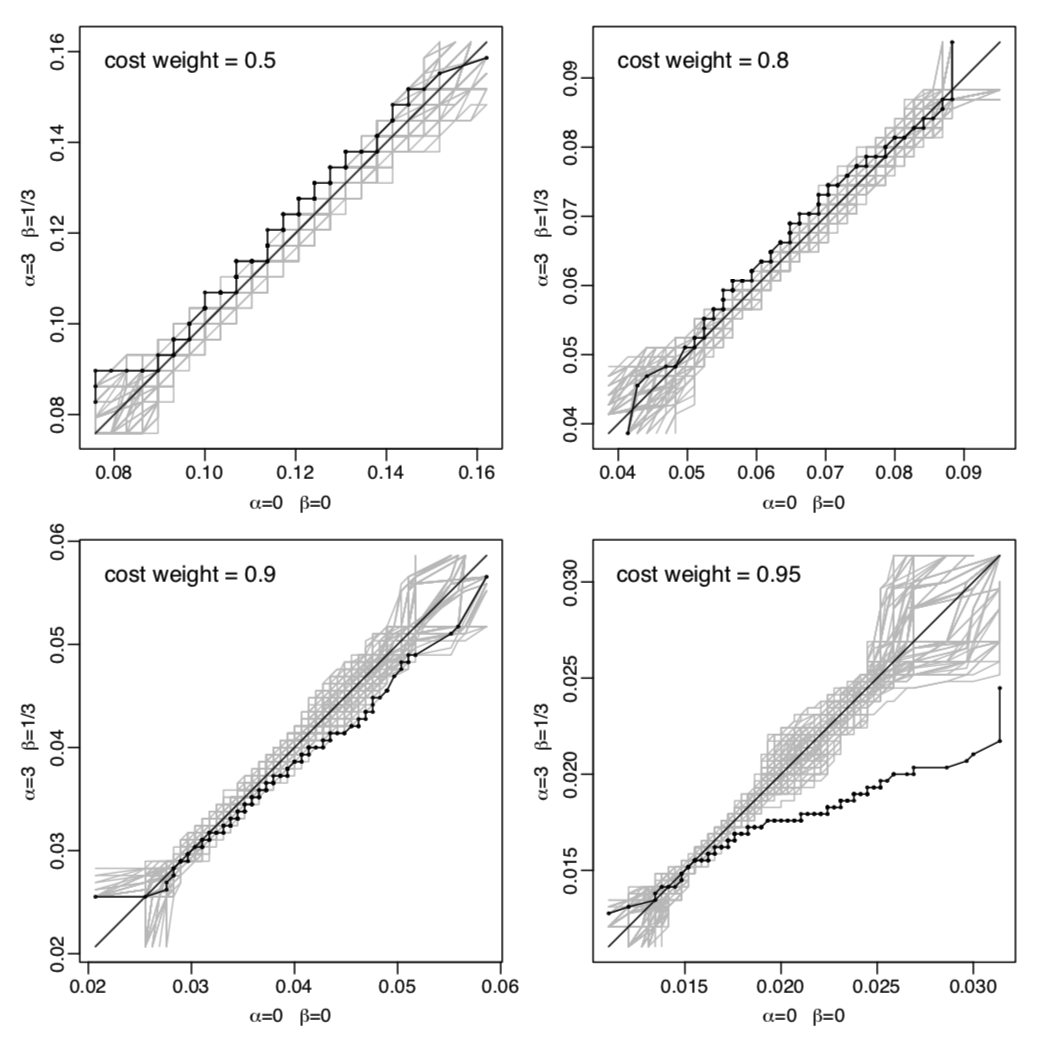

A simulation example

In the simulated data with bivariate $x$, the decision boundaries $\{x : \eta(x) = c\}$ for different $c$ are not parallel (grey lines)

The logit-link Beta family linear model estimates the classification boundary better than logistic regression

On the Pima Indians diabetes data

Comparing logistic regression with a proper scoring rule tailored for high class 1 probabilities

Black lines: empirical QQ curves of 200 cost-weighted misclassification costs computed on randomly selected test sets

References

Buja, A., Stuetzle, W., & Shen, Y. (2005). Loss functions for binary class probability estimation and classification: Structure and applications. Working draft, November 3, 2005. http://www-stat.wharton.upenn.edu/~buja/PAPERS/paper-proper-scoring.pdf

For a game theory definition of proper scoring rule, see https://www.cis.upenn.edu/~aaroth/courses/slides/agt17/lect23.pdf

Fitting linear models with custom loss functions in Python: https://alex.miller.im/posts/linear-model-custom-loss-function-regularization-python/

Fitting XGBoost with custom loss functions in Python: https://xgboost.readthedocs.io/en/latest/tutorials/custom_metric_obj.html