
Overview of CNNs and RNNs for NLP

CNN and RNN architectures explored in this part of the book are primarily used as feature extractors

CNNs and RNNs as Lego bricks: one just needs to make sure that input and output dimensions of the different components match

Ch13 Ngram Detectors: Convolutional Neural Networks

CNN Overview

CNN overview for NLP

CBOW (continuous bag of words) assigns the following two sentences the same representation

- “it was not good, it was actually quite bad”

- “it was not bad, it was actually quite good”

Looking at ngrams is much more informative than looking at a bag-of-words
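A quick way to check this claim is to compare the word counts and the bigram counts of the two sentences. The sketch below assumes a naive tokenizer (lowercased letters only, punctuation dropped) chosen just for illustration. Because CBOW averages word embeddings, two sentences with the same word multiset get the same vector, while their bigrams still differ.

```python
from collections import Counter
import re

def tokens(sentence):
    # Naive tokenizer (illustrative assumption): lowercase words, punctuation dropped
    return re.findall(r"[a-z]+", sentence.lower())

def bigrams(words):
    # Consecutive word pairs, i.e., 2-grams
    return list(zip(words, words[1:]))

s1 = "it was not good, it was actually quite bad"
s2 = "it was not bad, it was actually quite good"

# Same multiset of words -> identical bag-of-words (and CBOW) representation
bow_equal = Counter(tokens(s1)) == Counter(tokens(s2))
# Bigrams such as ("not", "good") vs ("not", "bad") tell the sentences apart
bigram_equal = Counter(bigrams(tokens(s1))) == Counter(bigrams(tokens(s2)))
print(bow_equal, bigram_equal)  # True False
```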

This chapter introduces the convolution-and-pooling architecture (also called convolutional neural networks, or CNNs), which is tailored to this modeling problem

Benefits of CNN for NLP

CNNs will identify ngrams that are predictive for the task at hand, without the need to pre-specify an embedding vector for each possible ngram

CNNs also allow predictive behavior to be shared between ngrams that share similar components, even if the exact ngram was never seen during training


Basic Convolution Pooling

Convolution

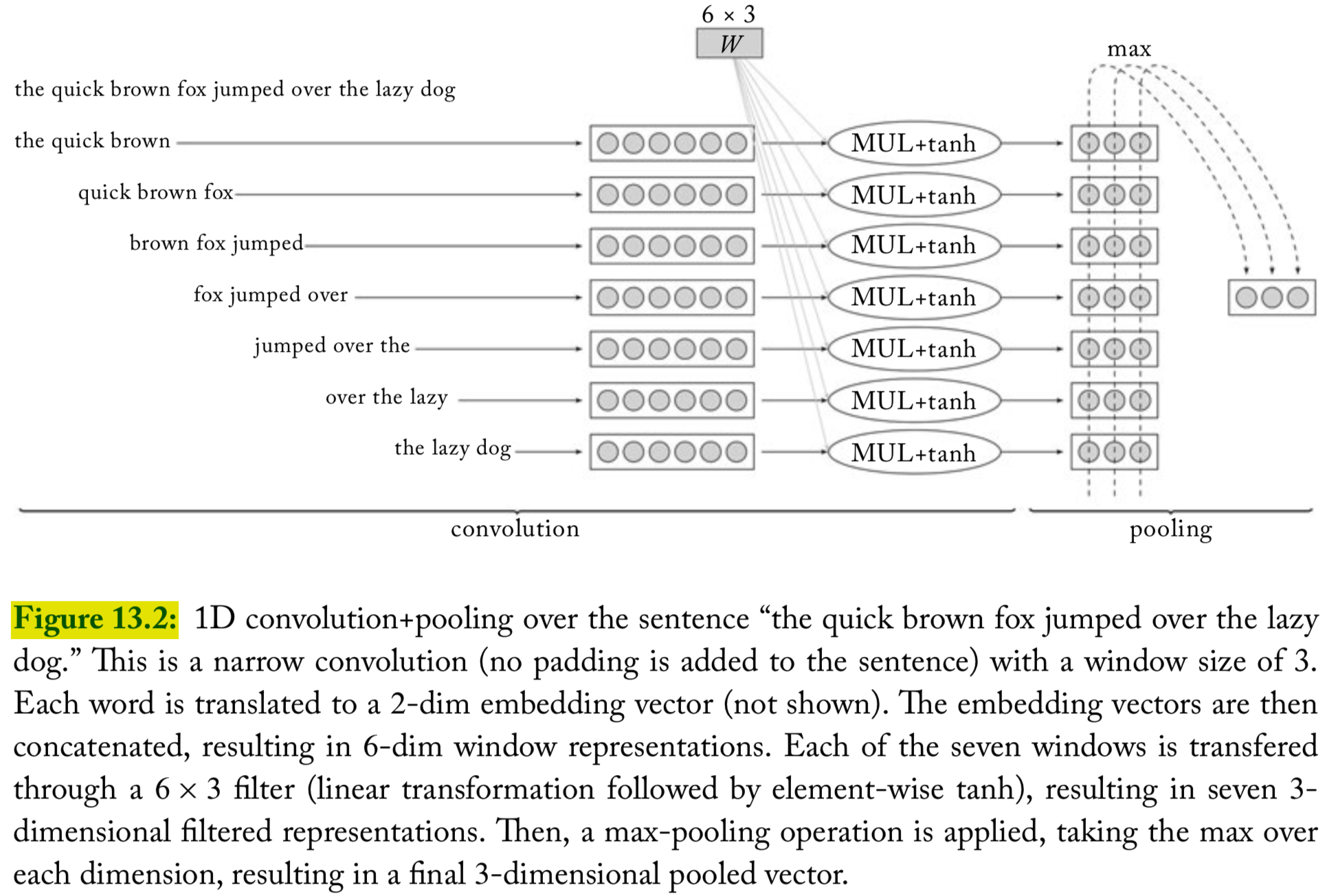

The main idea behind a convolution-and-pooling architecture for language tasks is to apply a nonlinear (learned) function over each instantiation of a $k$-word sliding window over the sentence

This function (also called a “filter”) transforms a window of $k$ words into a scalar value

- Intuitively, when the sliding window of size $k$ is run over a sequence, the filter function learns to identify informative $k$-grams

Several such filters, say $\ell$ of them, can be applied, resulting in an $\ell$-dimensional vector (each dimension corresponding to one filter) that captures important properties of the words in the window

Pooling

Then a “pooling” operation is used to combine the vectors resulting from the different windows into a single $\ell$-dimensional vector, by taking the max or the average value observed in each of the dimensions over the different windows

- The intention is to focus on the most important “features” in the sentence, regardless of their location

The resulting $\ell$-dimensional vector is then fed further into a network that is used for prediction

1D convolutions over text

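A filter is a dot product with a weight vector parameter $\mathbf{u}$, often followed by a nonlinear activation function $g$

Consider a sentence of $n$ words with embeddings $\mathbf{w}_{1:n}$, each $\mathbf{w}_i \in \mathbb{R}^{d_{emb}}$. Define the operation $\oplus(\mathbf{w}_{i:i+k-1})$ to be the concatenation of the vectors $\mathbf{w}_i, \dots, \mathbf{w}_{i+k-1}$. The concatenated vector of the $i$th window is then

$$\mathbf{x}_i = \oplus(\mathbf{w}_{i:i+k-1}) = [\mathbf{w}_i; \mathbf{w}_{i+1}; \dots; \mathbf{w}_{i+k-1}], \quad \mathbf{x}_i \in \mathbb{R}^{k \cdot d_{emb}}$$

Apply the filter to each window vector, resulting in a scalar value $p_i$:

$$p_i = g(\mathbf{x}_i \cdot \mathbf{u}), \quad \mathbf{u} \in \mathbb{R}^{k \cdot d_{emb}},\ p_i \in \mathbb{R}$$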
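The single-filter case can be sketched in plain Python as follows. The embeddings and filter weights are made-up toy values, $g$ is assumed to be $\tanh$, and indices are 0-based in the code while the text uses 1-based indices.

```python
import math

def concat_window(embs, i, k):
    # x_i = ⊕(w_{i:i+k-1}): concatenate k consecutive embeddings (0-based start index i)
    return [v for w in embs[i:i + k] for v in w]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def single_filter_conv(embs, u, k, g=math.tanh):
    # p_i = g(x_i · u) for every window that fits inside the sentence
    return [g(dot(concat_window(embs, i, k), u)) for i in range(len(embs) - k + 1)]

# Hypothetical toy data: n=4 words, d_emb=2, window k=2, so u has k*d_emb = 4 entries
embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
u = [0.1, 0.2, 0.3, 0.4]
p = single_filter_conv(embs, u, k=2)
print(len(p))  # 3 scalar values, one per window
```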

Joint formulation of 1D convolutions

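- It is customary to use $\ell$ different filters $\mathbf{u}_1, \dots, \mathbf{u}_\ell$, which can be arranged into a matrix $\mathbf{U} \in \mathbb{R}^{k \cdot d_{emb} \times \ell}$, and a bias vector $\mathbf{b} \in \mathbb{R}^{\ell}$ is often added:

$$\mathbf{p}_i = g(\mathbf{x}_i \cdot \mathbf{U} + \mathbf{b}), \quad \mathbf{p}_i \in \mathbb{R}^{\ell}$$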

Ideally, each dimension captures a different kind of indicative information

The main idea behind the convolution layer is to apply the same parameterized function over all $k$-grams in the sequence. This creates a sequence of vectors, each representing a particular $k$-gram in the sequence
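With $\ell$ filters stacked as the columns of $\mathbf{U}$, every window produces an $\ell$-dimensional vector $\mathbf{p}_i$. The sketch below is a plain-Python narrow convolution under the assumption that $\mathbf{U}$ is stored as a list of $k \cdot d_{emb}$ rows and that $g$ defaults to $\tanh$. The toy weights are hypothetical and chosen so the outputs are easy to check by hand.

```python
import math

def conv1d(embs, U, b, k, g=math.tanh):
    # embs: n vectors of size d_emb; U: (k*d_emb) x ell matrix as a list of rows; b: ell biases
    # Returns p_1..p_m with p_i = g(x_i · U + b), using a narrow convolution (m = n - k + 1)
    ell = len(b)
    out = []
    for i in range(len(embs) - k + 1):
        x = [v for w in embs[i:i + k] for v in w]  # concatenated window vector x_i
        assert len(x) == len(U), "U must have k*d_emb rows"
        p = [g(sum(x[t] * U[t][j] for t in range(len(x))) + b[j]) for j in range(ell)]
        out.append(p)
    return out

# Hypothetical toy setup: n=4, d_emb=2, k=2, ell=3 filters (columns of U)
embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
U = [[1.0, 0.0, 0.5],   # column 0 sums the first dimension of both words in the window
     [0.0, 1.0, 0.5],   # column 1 sums the second dimension of both words
     [1.0, 0.0, 0.5],   # column 2 is half the sum of all window entries
     [0.0, 1.0, 0.5]]
b = [0.0, 0.0, 0.0]
P = conv1d(embs, U, b, k=2)
print(len(P), len(P[0]))  # 3 3
```

Passing `g=lambda z: z` exposes the raw filter responses, which helps when checking the arithmetic by hand.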

Narrow vs wide convolutions

For a sentence of length $n$ with a window of size $k$:

Narrow convolutions: there are $n-k+1$ positions at which to start the window, so we get $n-k+1$ vectors

Wide convolutions: an alternative is to pad the sentence with $k-1$ padding words on each side, resulting in $n+k-1$ vectors

We use $m$ to denote the number of resulting vectors
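The two counts can be checked by enumerating windows directly. This sketch works on tokens rather than vectors. The example sentence and the `<PAD>` token name are hypothetical choices for illustration.

```python
def pad(words, k, pad_token="<PAD>"):
    # Wide convolution: add k-1 padding tokens to each side of the sentence
    return [pad_token] * (k - 1) + list(words) + [pad_token] * (k - 1)

def windows(words, k):
    # All k-word windows, one starting at every position (stride 1)
    return [tuple(words[i:i + k]) for i in range(len(words) - k + 1)]

sent = "the actors were not good".split()
n, k = len(sent), 3
narrow = windows(sent, k)
wide = windows(pad(sent, k), k)
print(len(narrow), n - k + 1)  # 3 3
print(len(wide), n + k - 1)    # 7 7
```

In the wide case the first window is `("<PAD>", "<PAD>", "the")`, so every word, including the first and last, appears in exactly $k$ windows.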

Vector pooling

Applying the convolution over the text results in $m$ vectors $\mathbf{p}_{1:m}$, each $\mathbf{p}_i \in \mathbb{R}^{\ell}$

These vectors are then combined (pooled) into a single vector $\mathbf{c} \in \mathbb{R}^{\ell}$ representing the entire sequence

During training, the vector $\mathbf{c}$ is fed into downstream network layers (e.g., an MLP), culminating in an output layer which is used for prediction

Different pooling methods

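Max pooling: the most common choice, taking the maximum value across each dimension:

$$\mathbf{c}_{[j]} = \max_{1 \le i \le m} \mathbf{p}_{i[j]} \quad \forall j \in [1, \ell]$$

- The effect of the max-pooling operation is to get the most salient information across window positions

Average pooling: taking the average value across each dimension:

$$\mathbf{c} = \frac{1}{m} \sum_{i=1}^{m} \mathbf{p}_i$$

$k$-max pooling: the top $k$ values in each dimension are retained instead of only the best one, while preserving the order in which they appeared in the text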
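The three pooling variants can be sketched over a list of $m$ vectors as follows. The input values are hypothetical. Returning $k$-max pooling as $\ell$ lists of $k$ values is a layout choice made here: concatenating them yields a $k \cdot \ell$-dimensional vector, and other orderings are equally possible.

```python
def max_pool(P):
    # c[j] = max over window positions i of p_i[j]
    return [max(col) for col in zip(*P)]

def avg_pool(P):
    # c = (1/m) * sum_i p_i
    m = len(P)
    return [sum(col) / m for col in zip(*P)]

def k_max_pool(P, k):
    # For each dimension keep the k largest values, in their original text order
    result = []
    for col in zip(*P):
        top_idx = sorted(range(len(col)), key=lambda i: col[i], reverse=True)[:k]
        result.append([col[i] for i in sorted(top_idx)])
    return result  # ell lists of k values each

# Hypothetical convolution output: m=4 windows, ell=2 filters
P = [[0.1, 0.2], [0.7, 0.8], [0.3, 0.4], [0.5, 0.9]]
print(max_pool(P))       # [0.7, 0.9]
print(k_max_pool(P, 2))  # [[0.7, 0.5], [0.8, 0.9]]
```

In the second dimension, $k$-max pooling returns `[0.8, 0.9]` rather than `[0.9, 0.8]` because 0.8 occurs earlier in the text.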

An illustration of convolution and pooling

Variations

Rather than a single convolutional layer, several convolutional layers may be applied in parallel

For example, we may have four different convolutional layers, each with a different window size in the range 2 to 5, capturing ngrams of varying lengths
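A minimal sketch of this parallel setup, assuming each window size has its own filter matrix and bias, a $\tanh$ activation, and max pooling. The pooled vectors are then concatenated into one feature vector. All weights and embeddings are random placeholders from a seeded generator. The sentence must contain at least as many words as the largest window, because the convolutions here are narrow.

```python
import math
import random

def conv_max(embs, U, b, k):
    # Narrow convolution p_i = tanh(x_i · U + b), followed by max pooling over positions
    P = []
    for i in range(len(embs) - k + 1):
        x = [v for w in embs[i:i + k] for v in w]
        P.append([math.tanh(sum(xt * row[j] for xt, row in zip(x, U)) + b[j])
                  for j in range(len(b))])
    return [max(col) for col in zip(*P)]

def multi_window_encoder(embs, params):
    # params maps window size k -> (U_k, b_k); the pooled outputs are concatenated
    out = []
    for k in sorted(params):
        U, b = params[k]
        out.extend(conv_max(embs, U, b, k))
    return out

# Hypothetical setup: d_emb=3, ell=4 filters per window size, window sizes 2..5
rng = random.Random(0)
d_emb, ell = 3, 4
params = {
    k: ([[rng.uniform(-1, 1) for _ in range(ell)] for _ in range(k * d_emb)], [0.0] * ell)
    for k in range(2, 6)
}
sentence = [[rng.uniform(-1, 1) for _ in range(d_emb)] for _ in range(7)]  # n=7 word vectors
features = multi_window_encoder(sentence, params)
print(len(features))  # 4 window sizes * 4 filters = 16
```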

Hierarchical Convolutions


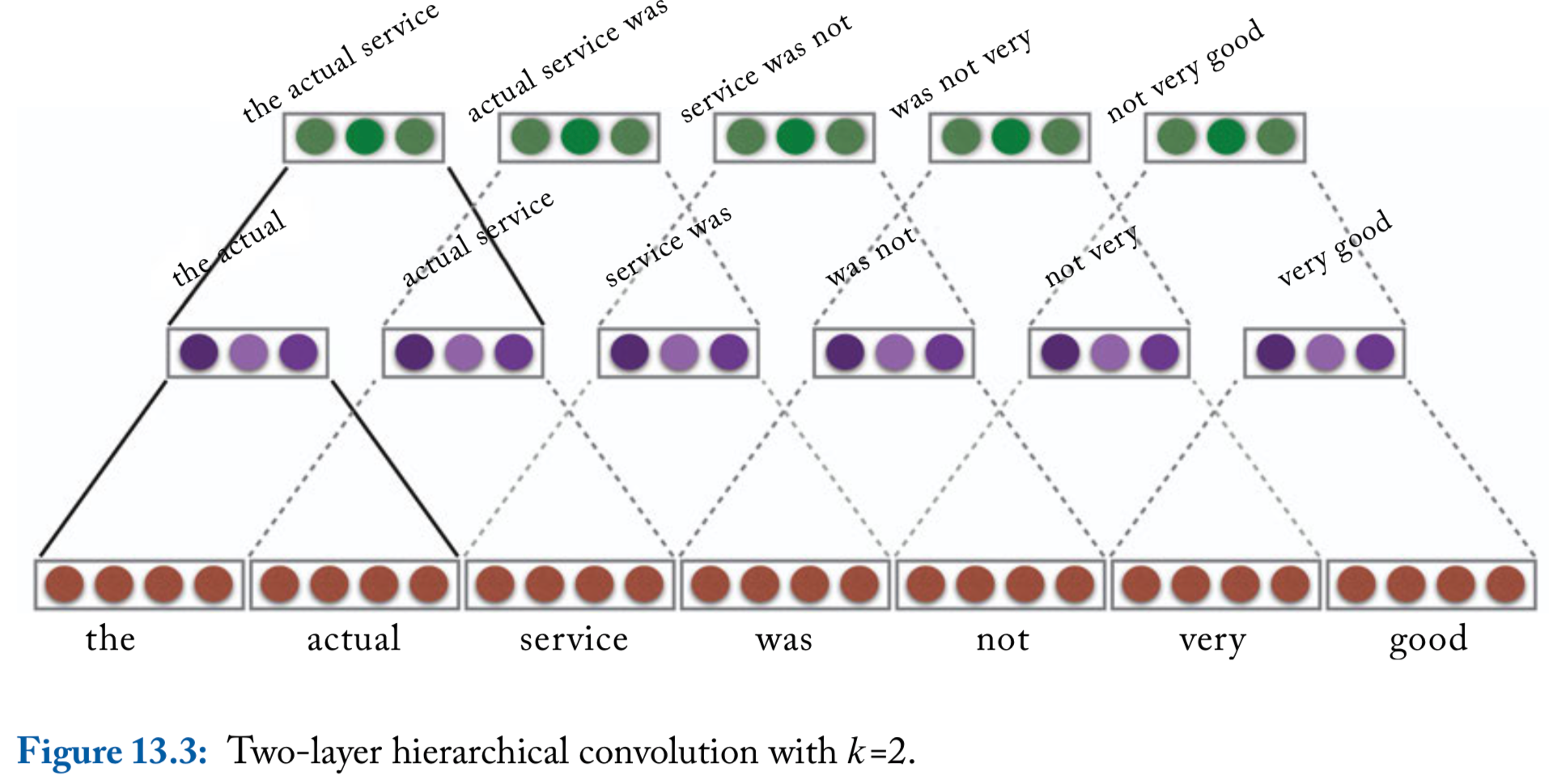

- The 1D convolution approach described so far can be thought of as an ngram detector: a convolution layer with a window of size $k$ learns to identify indicative $k$-grams in the input

- We can extend this into a hierarchy of $r$ convolutional layers that feed into each other, where each layer takes the sequence of vectors produced by the previous layer as its input

Hierarchical convolutions, continued

For $r$ layers with a window of size $k$, each vector $\mathbf{p}^{r}_i$ will be sensitive to a window of $r(k-1)+1$ words

Moreover, the vector $\mathbf{p}^{r}_i$ can be sensitive to gappy ngrams, potentially capturing patterns such as “not ___ good” or “obvious ___ predictable ___ plot”, where ___ stands for a short sequence of words
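The $r(k-1)+1$ window size can be checked by tracking which words each output vector depends on, rather than computing actual values. This sketch assumes narrow convolutions with stride 1 at every layer and uses 0-based, inclusive word positions.

```python
def receptive_spans(n, k, r):
    # For each output vector of layer r, the (first, last) word positions it depends on,
    # after stacking r narrow convolutions with window k and stride 1
    spans = [(i, i) for i in range(n)]  # layer 0: each word depends only on itself
    for _ in range(r):
        # A layer-t vector combines k consecutive layer-(t-1) vectors
        spans = [(spans[i][0], spans[i + k - 1][1]) for i in range(len(spans) - k + 1)]
    return spans

n, k = 10, 3
for r in (1, 2, 3):
    spans = receptive_spans(n, k, r)
    width = spans[0][1] - spans[0][0] + 1
    print(r, width, r * (k - 1) + 1)  # widths 3, 5, 7 match r(k-1)+1
```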

Strides

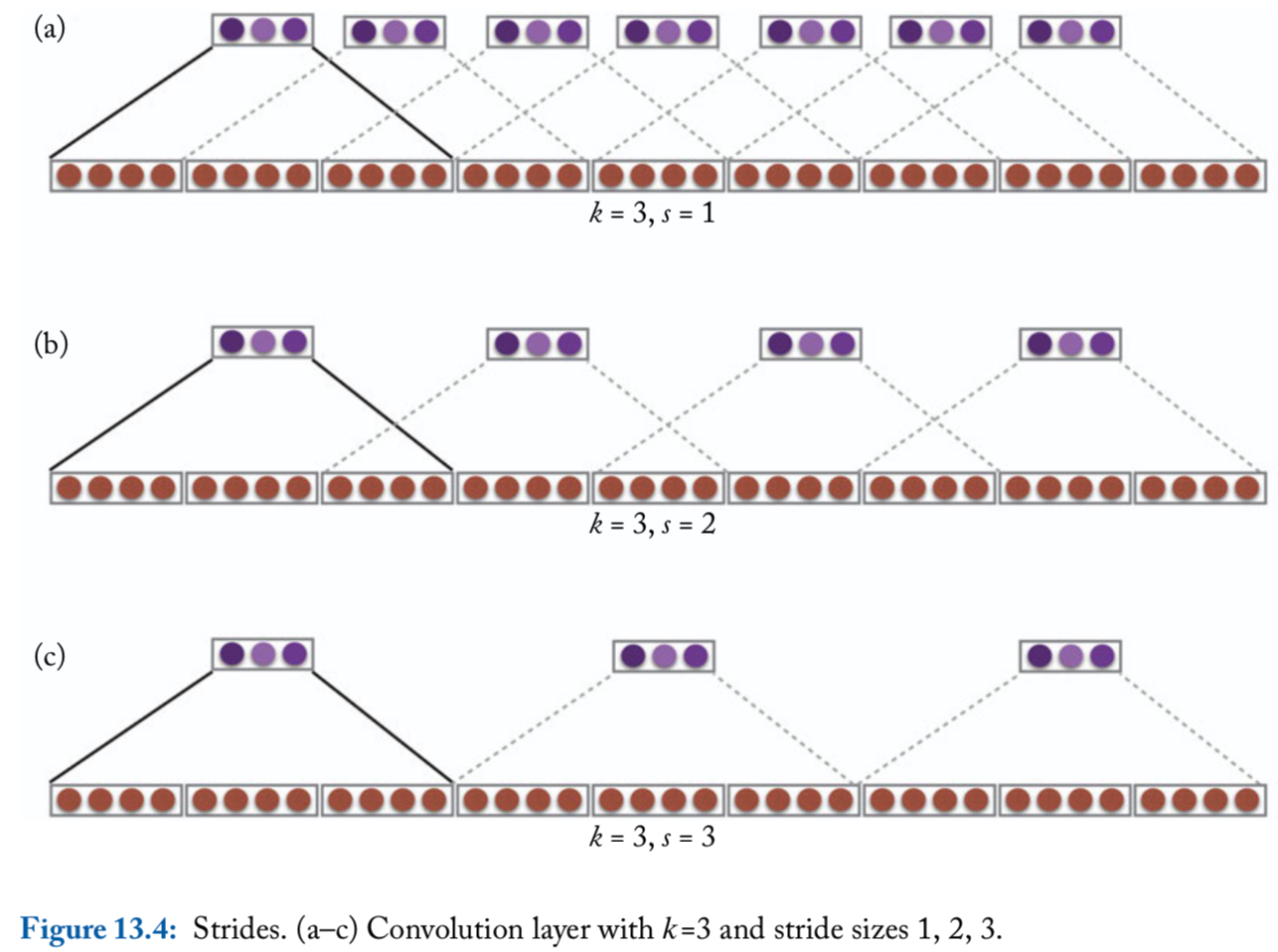

So far, the convolution operation has been applied to each $k$-word window in the sequence, i.e., windows starting at indices $1, 2, 3, \dots$. This is said to have a stride of size 1

Larger strides are also possible. For example, with a stride of size 2, the convolution operation will be applied to windows starting at indices $1, 3, 5, \dots$

Convolution with window size $k$ and stride size $s$:

$$\mathbf{p}_i = g\left(\oplus(\mathbf{w}_{1+(i-1)s \,:\, (i-1)s+k}) \cdot \mathbf{U} + \mathbf{b}\right)$$
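To see which words each strided window covers, this sketch enumerates the 1-based spans $\mathbf{w}_{1+(i-1)s \,:\, (i-1)s+k}$ from the formula above. It keeps only windows that fit inside the sentence, as in a narrow convolution, so the number of windows is $\lfloor (n-k)/s \rfloor + 1$.

```python
def strided_windows(n, k, s):
    # 1-based (start, end) word indices of window i: w_{1+(i-1)s : (i-1)s+k}
    spans = []
    i = 1
    while (i - 1) * s + k <= n:  # keep only windows that fit inside the sentence
        spans.append((1 + (i - 1) * s, (i - 1) * s + k))
        i += 1
    return spans

for s in (1, 2, 3):
    print(s, strided_windows(n=9, k=3, s=s))
# stride 2 -> [(1, 3), (3, 5), (5, 7), (7, 9)]
```

With $s = k = 3$ the windows tile the sentence without overlap. With $s < k$ consecutive windows share $k - s$ words.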

An illustration of different stride sizes

References

- Goldberg, Yoav (2017). Neural Network Methods for Natural Language Processing. Morgan & Claypool