
Inverse Probability of Treatment Weighting

Motivating example

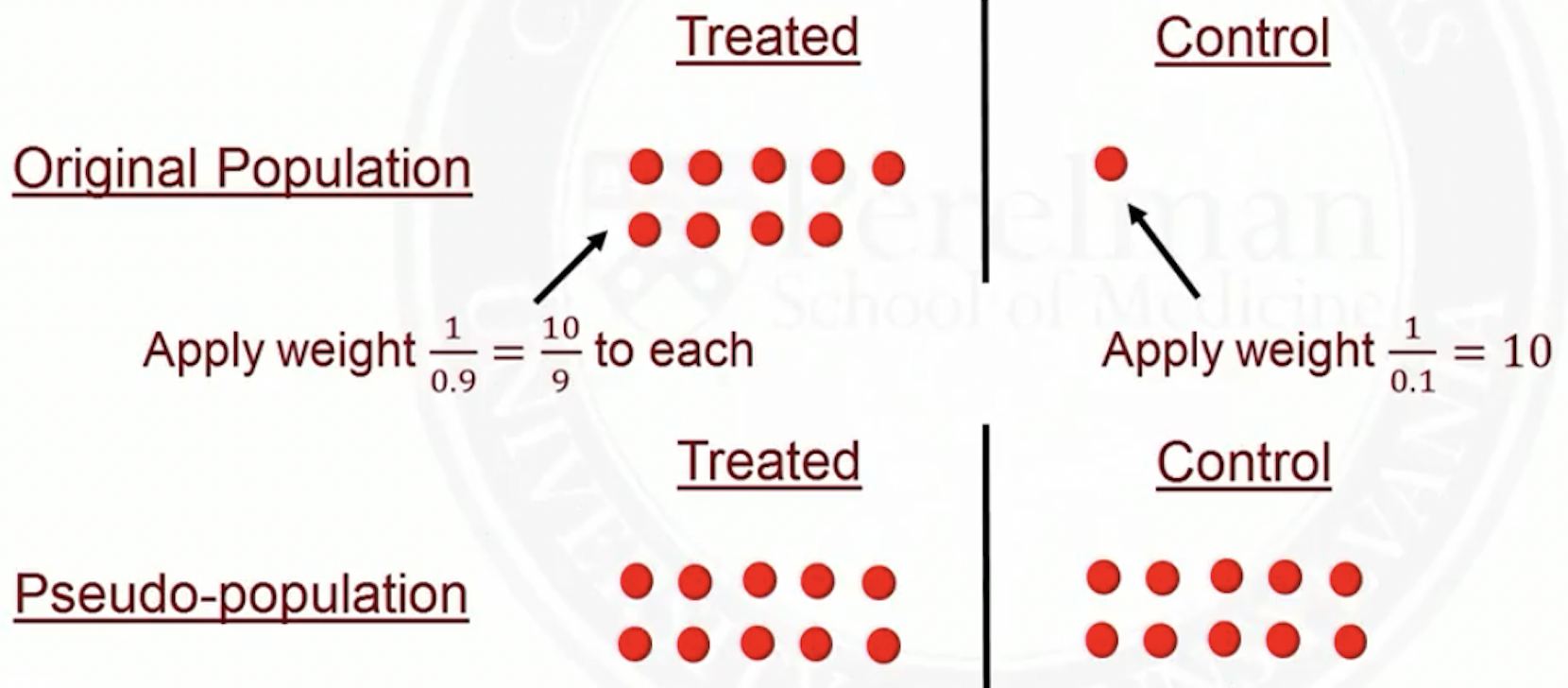

- Suppose there is a single confounder $X$, with propensity scores $P(A = 1 \mid X)$

In propensity score matching, for subjects in a stratum of $X$ where the propensity score is $0.1$ (one treated subject for every nine controls), 1 out of 9 controls will be matched to the treated subject

Thus, 1 person in the treated group counts the same as 9 people from the control group

So rather than matching, we could use all the data, but down-weight each control subject to count as just 1/9 of a treated subject

Inverse probability of treatment weighting (IPTW)

IPTW weights: inverse of the probability of treatment received

- For treated subjects, weight by $1 / P(A = 1 \mid X)$

- For control subjects, weight by $1 / P(A = 0 \mid X)$

In the previous example

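For $X = 1$, the weight for a treated subject is $1 / P(A = 1 \mid X = 1)$, and the weight for a control subject is $1 / P(A = 0 \mid X = 1)$

For $X = 0$, the weight for a treated subject is $1 / P(A = 1 \mid X = 0)$, and the weight for a control subject is $1 / P(A = 0 \mid X = 0)$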
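As a small worked illustration of the weights above, the sketch below computes them for a stratum whose propensity score is 0.1, the value implied by the "1 out of 9 controls" matching ratio. The function name `iptw_weight` is hypothetical and chosen only for this example.

```python
def iptw_weight(treated: bool, propensity: float) -> float:
    """Inverse of the probability of the treatment actually received."""
    if not 0.0 < propensity < 1.0:
        raise ValueError("propensity must be strictly between 0 and 1")
    return 1.0 / propensity if treated else 1.0 / (1.0 - propensity)

# Stratum with propensity score 0.1 (one treated subject per nine controls).
w_treated = iptw_weight(True, 0.1)    # 1 / 0.1
w_control = iptw_weight(False, 0.1)   # 1 / 0.9
ratio = w_treated / w_control         # how many controls one treated subject is worth

print(w_treated, w_control, ratio)
```

The ratio of the two weights is 9 (up to floating-point rounding), which matches the statement that each control counts as 1/9 of a treated subject.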

Motivation: in survey sampling, it is common to oversample some subpopulations, and then use the Horvitz-Thompson estimator to estimate population means

Pseudo population

IPTW creates a pseudo-population where treatment assignment no longer depends on $X$

- So there is no confounding in the pseudo-population

In the original population, some people were more likely to get treated based on their $X$'s

In the pseudo-population, everyone is equally likely to get treated, regardless of their $X$'s
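The pseudo-population idea can be checked numerically. The sketch below uses hypothetical toy data (the counts are invented for illustration) with one binary confounder, estimates the propensity score as the treated fraction within each stratum, and sums the IPTW weights to get the pseudo-population size of each treatment group.

```python
from collections import defaultdict

# Hypothetical toy data: (x, a) pairs.
# Stratum x=1: 1 treated, 9 controls. Stratum x=0: 8 treated, 2 controls.
subjects = [(1, 1)] * 1 + [(1, 0)] * 9 + [(0, 1)] * 8 + [(0, 0)] * 2

# Propensity score per stratum: observed fraction treated among subjects with that x.
n_by_x = defaultdict(int)
treated_by_x = defaultdict(int)
for x, a in subjects:
    n_by_x[x] += 1
    treated_by_x[x] += a
propensity = {x: treated_by_x[x] / n_by_x[x] for x in n_by_x}

# Pseudo-population size of each (x, a) cell: sum of the IPTW weights in that cell.
pseudo = defaultdict(float)
for x, a in subjects:
    p = propensity[x]
    pseudo[(x, a)] += 1 / p if a == 1 else 1 / (1 - p)

for x in sorted(n_by_x):
    share = pseudo[(x, 1)] / (pseudo[(x, 1)] + pseudo[(x, 0)])
    print(f"x={x}: original P(A=1)={propensity[x]:.1f}, pseudo-population share treated={share:.2f}")
```

In both strata the treated and control groups end up the same size in the pseudo-population, so the share treated no longer varies with $X$.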

Estimation with IPTW

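We can estimate $E(Y^1)$ as below

$$\hat{E}(Y^1) = \frac{\sum_{i=1}^{n} I(A_i = 1)\, Y_i / \pi_i}{\sum_{i=1}^{n} I(A_i = 1) / \pi_i}$$

- where $\pi_i = P(A = 1 \mid X_i)$ is the propensity score

- The numerator is the sum of $Y$'s in the treated pseudo-population

- The denominator is the number of subjects in the treated pseudo-population

We can estimate $E(Y^0)$ as below

$$\hat{E}(Y^0) = \frac{\sum_{i=1}^{n} I(A_i = 0)\, Y_i / (1 - \pi_i)}{\sum_{i=1}^{n} I(A_i = 0) / (1 - \pi_i)}$$

Average treatment effect: $\hat{E}(Y^1) - \hat{E}(Y^0)$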
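The two weighted means and their difference can be sketched in a few lines. The function name `iptw_means` and the data are hypothetical; the propensity scores are taken as given rather than estimated.

```python
def iptw_means(y, a, ps):
    """Return IPTW estimates of (E[Y^1], E[Y^0]).

    y: outcomes, a: treatment indicators (0/1), ps: propensity scores P(A=1 | X).
    Each estimate is a weighted sum of outcomes divided by the sum of weights
    (the size of that arm in the pseudo-population).
    """
    num1 = sum(yi / p for yi, ai, p in zip(y, a, ps) if ai == 1)
    den1 = sum(1 / p for ai, p in zip(a, ps) if ai == 1)
    num0 = sum(yi / (1 - p) for yi, ai, p in zip(y, a, ps) if ai == 0)
    den0 = sum(1 / (1 - p) for ai, p in zip(a, ps) if ai == 0)
    return num1 / den1, num0 / den0

# Hypothetical data for illustration only.
y = [3.0, 5.0, 2.0, 4.0]
a = [1, 1, 0, 0]
ps = [0.5, 0.25, 0.5, 0.2]

mu1, mu0 = iptw_means(y, a, ps)
ate = mu1 - mu0
print(mu1, mu0, ate)
```

Here the treated weights are 2 and 4, so the treated mean is (6 + 20) / 6; the control weights are 2 and 1.25, so the control mean is (4 + 5) / 3.25.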

Marginal Structural Models

Marginal structural models

Marginal structural models (MSM): a model for the mean of the potential outcomes

Marginal: not conditional on the confounders (population average)

Structural: for potential outcomes, not observed outcomes

Linear MSM and logistic MSM

Linear MSM

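- $E(Y^a) = \psi_0 + \psi_1 a$, $a = 0, 1$

- So the average causal effect $E(Y^1) - E(Y^0) = \psi_1$

Logistic MSM

- $\text{logit}\{E(Y^a)\} = \psi_0 + \psi_1 a$, $a = 0, 1$

- So the causal odds ratio $\dfrac{P(Y^1 = 1) / \{1 - P(Y^1 = 1)\}}{P(Y^0 = 1) / \{1 - P(Y^0 = 1)\}} = \exp(\psi_1)$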

MSM with effect modification

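Suppose $V$ is a variable that modifies the effect of $A$

A linear MSM with effect modification

- $E(Y^a \mid V) = \psi_0 + \psi_1 a + \psi_2 V + \psi_3 a V$, $a = 0, 1$

- So the average causal effect $E(Y^1 \mid V) - E(Y^0 \mid V) = \psi_1 + \psi_3 V$

General MSM

- $g\{E(Y^a \mid V)\} = h(a, V; \psi)$

- $g()$: link function

- $h()$: a function specifying the parametric form of $a$ and $V$ (typically additive, linear)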

MSM estimation using pseudo-population

Because of confounding, an MSM is different from a GLM (generalized linear model) fit to the observed data: in general, $E(Y^a) \neq E(Y \mid A = a)$

Pseudo-population (obtained from IPTW) is free of confounding

- We therefore estimate the MSM by fitting a GLM weighted by the IPTW weights

MSM estimation steps

Estimate propensity score, using logistic regression

Create weights

- Inverse of propensity score for treated subjects

- Inverse of one minus propensity score for control subjects

Specify the MSM of interest

Use software to fit a weighted generalized linear model

Use asymptotic (sandwich) variance estimator

- This accounts for the fact that the pseudo-population can be larger than the original sample
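The estimation steps can be sketched end to end on a toy example. Everything below is an illustrative assumption: the data are invented, the propensity score is estimated by stratum proportions (which is what a saturated logistic regression on one binary confounder would return), and the linear MSM $E(Y^a) = \psi_0 + \psi_1 a$ is fit with a hand-written weighted least squares helper instead of a GLM library. The sandwich variance step is not shown.

```python
# Hypothetical toy data: (x, a, y) with y = 2*a + 3*x, so the true causal effect is 2.
data = ([(1, 1, 5.0)] * 1 + [(1, 0, 3.0)] * 9 +
        [(0, 1, 2.0)] * 8 + [(0, 0, 0.0)] * 2)

# Step 1: propensity score, here the treated fraction within each stratum of x.
def fraction_treated(x_value):
    in_stratum = [a for x, a, _ in data if x == x_value]
    return sum(in_stratum) / len(in_stratum)

ps = {x_value: fraction_treated(x_value) for x_value in (0, 1)}

# Step 2: IPTW weights.
w = [1 / ps[x] if a == 1 else 1 / (1 - ps[x]) for x, a, _ in data]

# Steps 3-4: linear MSM E(Y^a) = psi0 + psi1 * a, fit by weighted least squares.
def weighted_simple_regression(a, y, w):
    sw = sum(w)
    a_bar = sum(wi * ai for wi, ai in zip(w, a)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (ai - a_bar) * (yi - y_bar) for wi, ai, yi in zip(w, a, y))
    sxx = sum(wi * (ai - a_bar) ** 2 for wi, ai in zip(w, a))
    psi1 = sxy / sxx
    return y_bar - psi1 * a_bar, psi1

a = [row[1] for row in data]
y = [row[2] for row in data]
psi0, psi1 = weighted_simple_regression(a, y, w)

# Unweighted difference in means, for comparison (confounded by x).
naive = (sum(yi for ai, yi in zip(a, y) if ai == 1) / sum(a)
         - sum(yi for ai, yi in zip(a, y) if ai == 0) / (len(a) - sum(a)))
print(psi0, psi1, naive)
```

In this toy data the unweighted comparison has the wrong sign because treated subjects mostly have $x = 0$, while the weighted fit recovers the effect of 2 built into the outcome.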

Bootstrap

We may also use bootstrap to estimate standard error

Bootstrap steps

1. Randomly sample with replacement from the original sample

2. Estimate parameters

3. Repeat steps 1 and 2 many times

4. Use the standard deviation of the bootstrap estimates as an estimate of the standard error
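The bootstrap steps can be sketched as follows. The helper names and data are hypothetical. As a simplification, the propensity scores are treated as fixed inputs; in a full analysis the propensity model would be re-estimated inside each bootstrap sample as part of "estimate parameters".

```python
import random
import statistics

def iptw_ate(rows):
    """IPTW estimate of E[Y^1] - E[Y^0]; rows are (y, a, propensity) tuples."""
    num1 = sum(y / p for y, a, p in rows if a == 1)
    den1 = sum(1 / p for _, a, p in rows if a == 1)
    num0 = sum(y / (1 - p) for y, a, p in rows if a == 0)
    den0 = sum(1 / (1 - p) for _, a, p in rows if a == 0)
    return num1 / den1 - num0 / den0

def bootstrap_se(rows, estimator, n_boot=500, seed=0):
    rng = random.Random(seed)
    estimates = []
    while len(estimates) < n_boot:                 # step 3: repeat many times
        resample = rng.choices(rows, k=len(rows))  # step 1: sample with replacement
        if {a for _, a, _ in resample} != {0, 1}:
            continue  # a resample missing one arm cannot be estimated; redraw
        estimates.append(estimator(resample))      # step 2: estimate parameters
    return statistics.stdev(estimates)             # step 4: SD of the estimates

# Hypothetical data for illustration only.
rows = [(3.0, 1, 0.5), (5.0, 1, 0.25), (4.0, 1, 0.5), (6.0, 1, 0.4),
        (2.0, 0, 0.5), (4.0, 0, 0.2), (1.0, 0, 0.3), (3.0, 0, 0.6)]
se = bootstrap_se(rows, iptw_ate)
print(se)
```

Fixing the seed makes the result reproducible; the number of replicates (500 here) is an arbitrary choice for the sketch.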

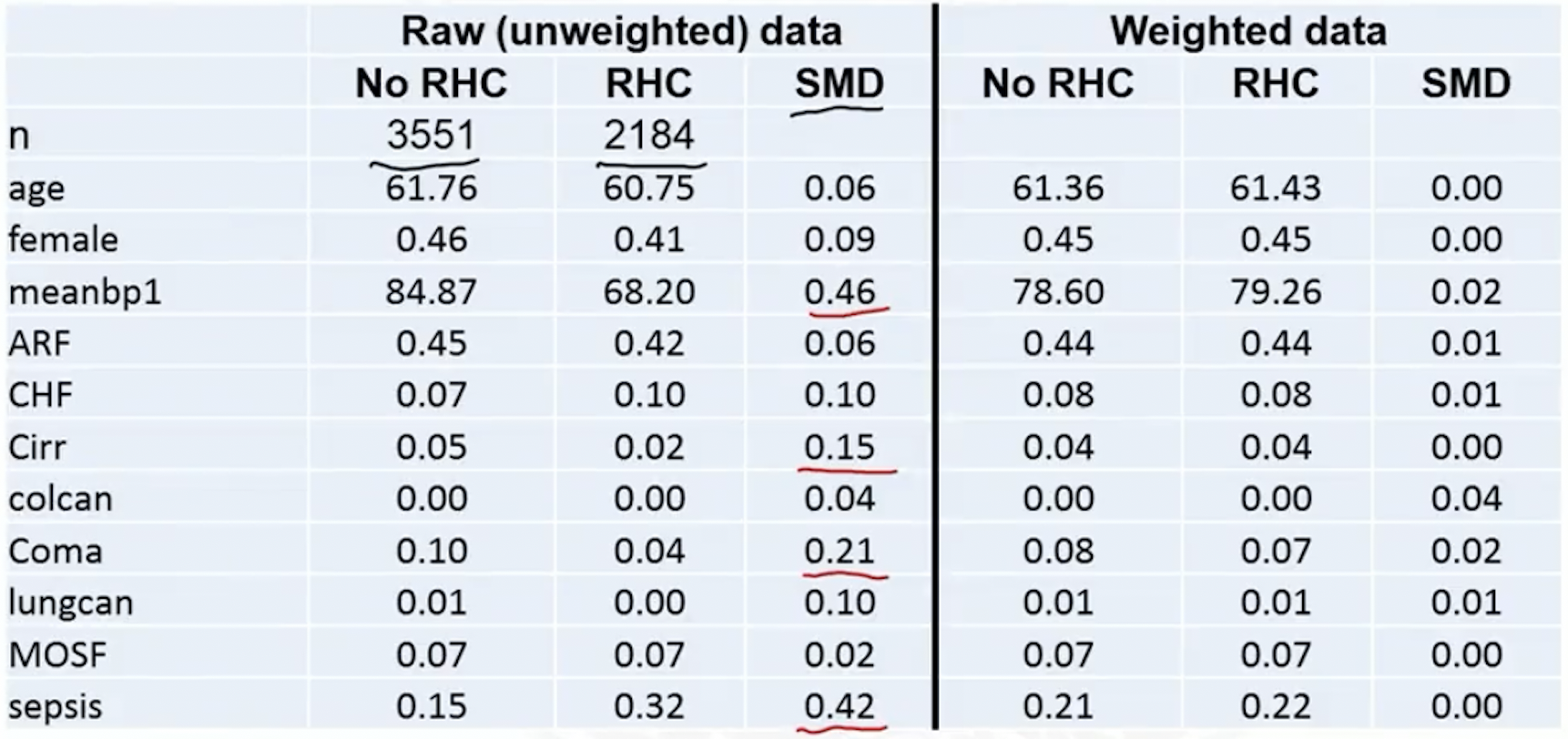

Assessing covariate balance with weights

Covariate balance check with standardized differences

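Covariate balance can be checked on the weighted sample using the standardized mean difference (SMD)

$$\text{SMD} = \frac{\bar{X}_{\text{treated}} - \bar{X}_{\text{control}}}{\sqrt{\left(s^2_{\text{treated}} + s^2_{\text{control}}\right) / 2}}$$

- Weighted means $\bar{X}_{\text{treated}}$, $\bar{X}_{\text{control}}$

- Weighted variances $s^2_{\text{treated}}$, $s^2_{\text{control}}$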
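A weighted standardized difference can be sketched as below. The data are hypothetical, and the weighted variance uses one simple convention (weighted average squared deviation, with no small-sample correction); balance-checking software may use a slightly different formula.

```python
import math

def weighted_mean(x, w):
    return sum(wi * xi for wi, xi in zip(w, x)) / sum(w)

def weighted_var(x, w):
    # One simple convention: weighted average squared deviation from the weighted mean.
    m = weighted_mean(x, w)
    return sum(wi * (xi - m) ** 2 for wi, xi in zip(w, x)) / sum(w)

def smd(x, a, w):
    """Standardized mean difference of covariate x between arms, using weights w."""
    xt, wt = zip(*[(xi, wi) for xi, ai, wi in zip(x, a, w) if ai == 1])
    xc, wc = zip(*[(xi, wi) for xi, ai, wi in zip(x, a, w) if ai == 0])
    pooled_sd = math.sqrt((weighted_var(xt, wt) + weighted_var(xc, wc)) / 2)
    return (weighted_mean(xt, wt) - weighted_mean(xc, wc)) / pooled_sd

# Hypothetical toy data: 9 treated (1 with x=1), 11 controls (9 with x=1).
x = [1] * 1 + [0] * 8 + [1] * 9 + [0] * 2
a = [1] * 9 + [0] * 11
ps = {1: 0.1, 0: 0.8}  # assumed propensity scores by stratum
w = [1 / ps[xi] if ai == 1 else 1 / (1 - ps[xi]) for xi, ai in zip(x, a)]

smd_unweighted = smd(x, a, [1.0] * len(x))
smd_weighted = smd(x, a, w)
print(smd_unweighted, smd_weighted)
```

Passing unit weights gives the usual unweighted SMD, so the same function can be used for a before-and-after comparison. In this toy data the unweighted SMD is large in magnitude and the weighted SMD is essentially zero.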

Balance check tools

- Table 1

- SMD plot

If imbalance after weighting

Refine propensity score model

- Interactions

- Non-linearity

Then reassess balance

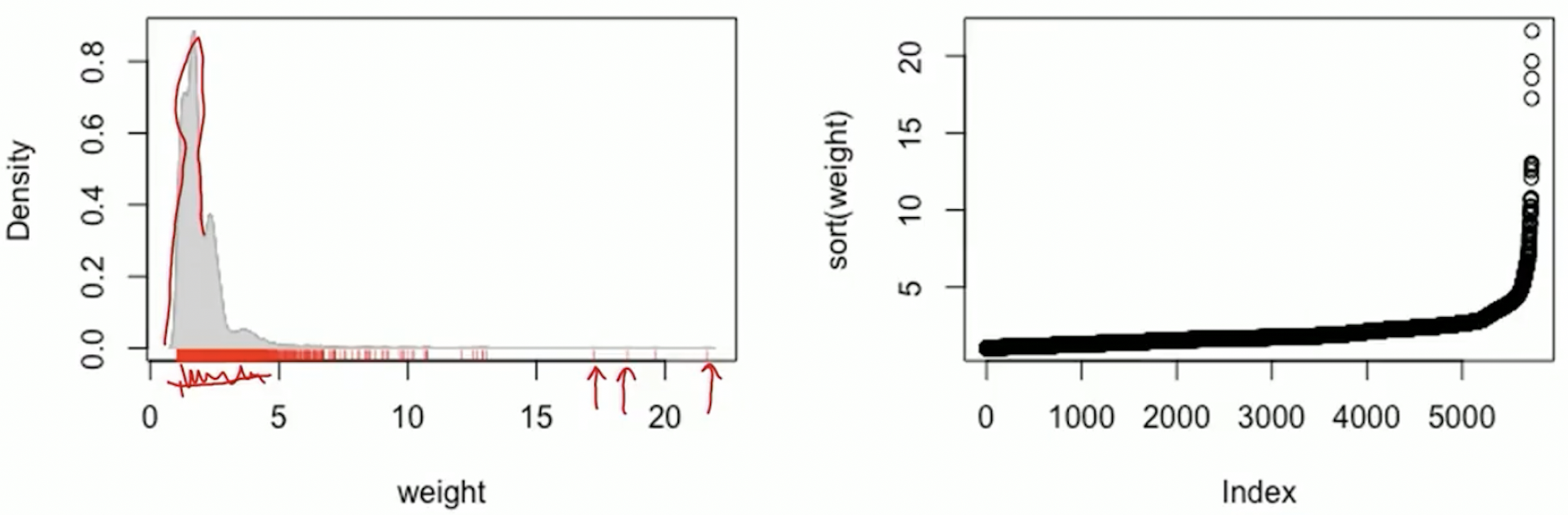

Problems and remedies for large weights

Larger weights lead to more noise

For a subject with a large weight, that subject's outcome data can greatly affect parameter estimation

A subject with a large weight can also affect standard error estimation via the bootstrap, depending on whether the subject is selected into a given bootstrap sample or not

An extremely large weight means the probability of receiving that treatment is very small, which signals a potential violation of the positivity assumption

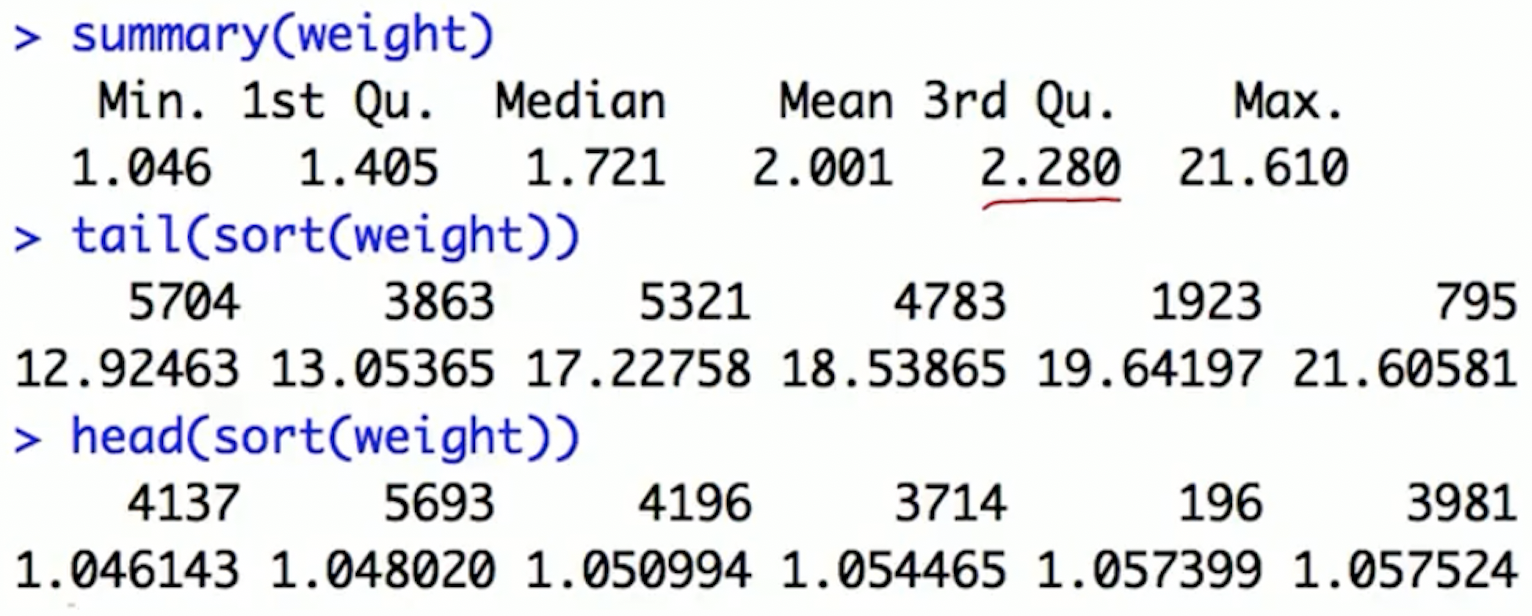

Check weights via plots and summary statistics

- Investigate very large weights: identify the subjects with large weights and find what’s unusual about them
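A quick numerical check of the weights might look like the sketch below. The helper names, the example weights, and the threshold of 10 are all hypothetical; what counts as "very large" depends on the study.

```python
import statistics

def summarize_weights(weights):
    """Basic summary statistics for a list of IPTW weights."""
    return {
        "min": min(weights),
        "median": statistics.median(weights),
        "mean": statistics.mean(weights),
        "max": max(weights),
    }

def flag_large(weights, threshold):
    """Indices of subjects whose weight exceeds `threshold`, for closer inspection."""
    return [i for i, w in enumerate(weights) if w > threshold]

# Hypothetical weights for illustration only.
weights = [1.2, 1.5, 2.0, 1.1, 45.0, 3.0, 1.3]
summary = summarize_weights(weights)
large = flag_large(weights, threshold=10.0)
print(summary, large)
```

A maximum far above the median, as in this example, is the kind of pattern that prompts a look at the flagged subjects' covariates.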

Option 1: trimming the tails

Large weights: occur in the tails of the propensity score distribution

Trim the tails to eliminate some extreme weights

- Remove treated subjects whose propensity scores are above the 98th percentile of the propensity score distribution among controls

- Remove control subjects whose propensity scores are below the 2nd percentile of the propensity score distribution among the treated
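The trimming rule can be sketched as below. The helper names and data are hypothetical, and the percentile helper uses linear interpolation between order statistics, which is one of several common percentile definitions.

```python
def percentile(values, q):
    """q-th percentile (0 to 100) with linear interpolation between order statistics."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)

def trim_tails(rows, upper_q=98, lower_q=2):
    """rows: (a, propensity) pairs. Returns the rows kept after trimming."""
    ps_control = [p for a, p in rows if a == 0]
    ps_treated = [p for a, p in rows if a == 1]
    upper = percentile(ps_control, upper_q)  # cutoff applied to treated subjects
    lower = percentile(ps_treated, lower_q)  # cutoff applied to control subjects
    return [(a, p) for a, p in rows
            if not (a == 1 and p > upper) and not (a == 0 and p < lower)]

# Hypothetical data for illustration only.
rows = [(1, 0.30), (1, 0.50), (1, 0.55), (1, 0.99),
        (0, 0.01), (0, 0.35), (0, 0.40), (0, 0.60)]
kept = trim_tails(rows)
print(kept)
```

In this example the treated subject with propensity 0.99 and the control subject with propensity 0.01 are dropped; these are the subjects with essentially no counterpart in the other arm.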

Note: trimming the tails changes the population

Option 2: truncating the weights

Another option to deal with large weights is truncation

Weight truncation steps

- Determine a maximum allowable weight

- Can be a specific value (e.g., 100)

- Can be based on a percentile (e.g., 99th)

- If a weight is greater than the maximum allowable, set it to the maximum allowable value

- Bias-variance trade-off

- Truncation: introduces bias, but gives smaller variance

- No truncation: unbiased, but larger variance

Truncating extremely large weights can result in estimators with lower MSE
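Weight truncation can be sketched as below, supporting either a fixed cap or a percentile-based cap. The function names, example weights, and cap of 10 are hypothetical; the percentile helper uses linear interpolation, which is one of several common definitions.

```python
def percentile(values, q):
    """q-th percentile (0 to 100) with linear interpolation between order statistics."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)

def truncate_weights(weights, max_weight=None, pct=None):
    """Cap weights at `max_weight`, or at the `pct`-th percentile of the weights."""
    if (max_weight is None) == (pct is None):
        raise ValueError("give exactly one of max_weight or pct")
    cap = max_weight if max_weight is not None else percentile(weights, pct)
    # Weights above the cap are set to the cap; all others are unchanged.
    return [min(w, cap) for w in weights]

# Hypothetical weights for illustration only.
weights = [1.2, 1.5, 2.0, 1.1, 45.0, 3.0, 1.3]
capped_fixed = truncate_weights(weights, max_weight=10.0)
capped_pct = truncate_weights(weights, pct=99)
print(capped_fixed)
print(capped_pct)
```

Unlike trimming, truncation keeps every subject in the analysis and only limits how much any single subject can count.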

References

Coursera class: “A Crash Course on Causality: Inferring Causal Effects from Observational Data”, by Jason A. Roy (University of Pennsylvania)